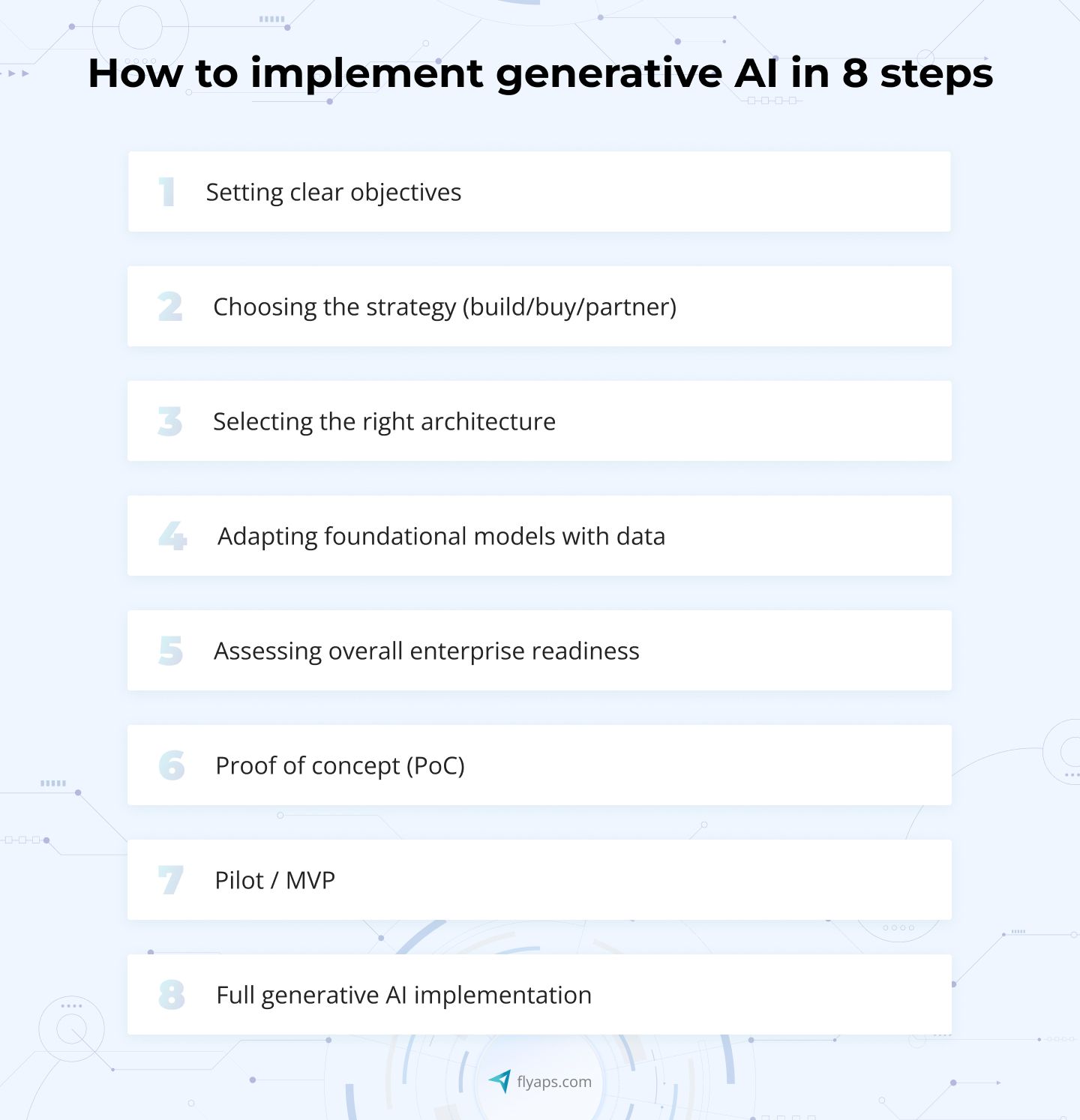

How to Implement Generative AI: Tailored Strategies for Every Scenario

Despite the growing buzz around generative AI tools such as ChatGPT and DALL-E 2, which have become essential across different industries, only 33% of McKinsey survey participants had gone beyond thinking about how to implement generative AI and actually integrated it.

Budget constraints often keep organizations from quickly adopting advanced technologies, since even affordable tools require staff training. Moreover, the demand for custom generative AI solutions, coupled with the high cost of developing such tools, exacerbates the hesitancy to adopt new technology.

Nevertheless, a significant number of forward-thinking companies are developing strategies to quickly incorporate this technology, which unlocks immediate advantages and yields significant benefits. For example, Vodafone has used generative AI as a virtual assistant in software engineering, resulting in impressive productivity gains of 30% to 45% during trials with around 250 developers.

We at Flyaps have extensive expertise in artificial intelligence and a track record of successfully delivering AI projects. These include CV Compiler, GlossaryTech Chrome extension, and the like. We carefully monitor instances where generative AI has been effectively implemented. In this article, we delve into how to implement generative AI with 8 proven steps and insights that will allow you to prepare for each scenario you may encounter.

Step 1. Setting clear objectives

For this guide, let’s imagine a financial advisory company looking to revolutionize its customer support using generative AI. Instead of immediately diving into the intricacies of AI models or data privacy, the CEO (let’s call her Sarah) starts by setting clear business goals.

The first step in generative AI implementation is brainstorming potential objectives. Good candidates are specific and measurable: reducing response times by 30%, increasing customer satisfaction scores by 20%, or handling a 20% surge in customer inquiries without compromising service quality. For example, with clear objectives in place, Octopus Energy increased customer satisfaction ratings by 18% by using generative AI to quickly draft detailed email responses.
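To keep objectives like these measurable, one option is to record a baseline and a target for each metric and check progress against it. The sketch below is illustrative only: the `Objective` class, the metric names, and all numbers are hypothetical and not taken from any real project.

```python
from dataclasses import dataclass


@dataclass
class Objective:
    """A business objective expressed as a relative change from a baseline."""
    name: str
    baseline: float        # value measured before the rollout
    target_change: float   # desired relative change, e.g. -0.30 for "30% lower"

    def relative_change(self, current: float) -> float:
        """Relative change of the current value versus the baseline."""
        return (current - self.baseline) / self.baseline

    def is_met(self, current: float) -> bool:
        """True when the change reaches the target, in the target's direction."""
        change = self.relative_change(current)
        if self.target_change < 0:
            return change <= self.target_change
        return change >= self.target_change


# Hypothetical numbers for illustration only.
response_time = Objective("avg response time (min)", baseline=10.0, target_change=-0.30)
satisfaction = Objective("customer satisfaction score", baseline=70.0, target_change=0.20)

print(response_time.name, response_time.is_met(6.5))   # a 35% reduction
print(satisfaction.name, satisfaction.is_met(80.0))    # roughly a 14% increase
```

Writing targets as relative changes keeps them comparable across metrics with different units, which helps when a team has to pick the single most impactful goal.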

In our case, Sarah works with her team to prioritize the company's goals, and they collectively decide that reducing response times is the most immediate and impactful one. By focusing on this clear business objective, her company sets the stage for a targeted generative AI implementation. Suppose they decide that the best way to achieve it is a chatbot that answers transactional questions, provides personalized investment advice, and guides users through financial planning processes.

Next, Sarah and her team create a roadmap to help them select the right technology and ensure data security.

Step 2. Choosing the strategy (build/buy/partner)

Now that our imaginary company has set clear business objectives for its generative AI implementation, it has to settle on a strategy: build, buy, or partner. Here’s a quick breakdown.

Building strategy

A building strategy involves creating an in-house infrastructure and assembling a team of experts in machine learning, natural language processing, and conversational design. This approach provides total control over the generative AI product but demands significant time, resources, and expertise. Leveraging pre-built solutions available through AI development platforms can significantly accelerate deployment while reducing complexity.

If a business depends on generative AI for a vital aspect of its operations, like Bloomberg and their BloombergGPT, then developing an in-house solution becomes very important. BloombergGPT is a significant system that operates with 50 billion parameters, demonstrating the financial firm's drive to collect and analyze data to create tailored information. A mere integration of ChatGPT wouldn't be enough; Bloomberg decided to invest significantly in its own solution. This move doesn't just give customers a first-to-market edge, it also strengthens the company's position by adding extra barriers around its unique tech approach, creating a major competitive advantage.

Buying strategy

For Sarah's team, a buying strategy is probably the best fit for their generative AI needs. Buying makes sense when the AI technology isn't something that defines the company or sets it apart, but rather a tool it needs to stay competitive in the market.

For a financial advisory company like Sarah’s, having a generative AI-powered chatbot can make their customer support more efficient. However, as mentioned, it's not the unique or defining feature that makes them stand out. But the good thing about this strategy is that one can buy a pre-built AI solution from an external vendor, without having to build it from scratch.

Partnering strategy

This generative AI strategy involves collaborating with or acquiring companies outside of the organization. This approach is useful when a required solution is in line with how the company positions itself but lacks internal resources. Partnerships can provide ready-made tools, expertise, and potentially a customer base, facilitating rapid scaling.

Look at Microsoft. As a 49% owner of OpenAI, the company has used OpenAI’s technology in Office and GitHub, which helped it gain a competitive advantage over Google, at least for now.
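The three strategies above boil down to two questions: is generative AI a core differentiator for the business, and does the company have the internal expertise to build it? A deliberately simplified sketch of that reasoning follows. The function name and its two boolean inputs are assumptions made for illustration; a real decision would also weigh budget, timelines, and vendor risk.

```python
from enum import Enum


class Strategy(Enum):
    BUILD = "build"
    BUY = "buy"
    PARTNER = "partner"


def choose_strategy(core_differentiator: bool, has_internal_expertise: bool) -> Strategy:
    """Map the two questions discussed above to a strategy (a simplification)."""
    if not core_differentiator:
        # The technology is a tool for staying competitive, not what sets the company apart.
        return Strategy.BUY
    if has_internal_expertise:
        # Generative AI is vital to the business and the company can staff the work.
        return Strategy.BUILD
    # Strategically important, but the internal resources are missing.
    return Strategy.PARTNER


# Sarah's firm: the chatbot improves support but is not the firm's defining feature.
print(choose_strategy(core_differentiator=False, has_internal_expertise=False).value)
```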

Whether Sarah decides to build her own generative AI product or pick another strategy, one thing is certain: she needs a team of reliable AI engineers for her endeavors. At Flyaps, we know how to build from scratch, customize solutions to your needs, and seamlessly integrate gen AI into your existing setup. If you, like Sarah, are on your way to embracing generative AI, our experienced technical specialists will have your back.

Step 3. Selecting the right architecture

At this point, Sarah’s team realizes they don’t have in-house tech specialists experienced in generative AI projects. Therefore, they reach out to an AI-focused software development company to help them with the next phase of their generative AI implementation.

With the business objectives and strategy clearly defined, the next crucial step for Sarah and her company is determining the most suitable architecture for their chatbot.

Even if Sarah is adopting a buying strategy for generative AI implementation, she needs to select a foundational model, because she's essentially getting pre-built or off-the-shelf AI solutions from external vendors. These solutions are often built upon foundational models that serve as the basis for generative AI technology. Therefore, she still needs to make informed decisions about which model aligns best with her goals and requirements when adopting a buying strategy.

But let’s assume Sarah couldn’t find a perfect pre-built solution and the budget allows her to build her own. So she and the tech team gather to choose a foundation model: a large model pre-trained on broad data that can then be adapted to specific tasks. GPT-4, DALL·E 2, BERT, CLIP, LaMDA, Stable Diffusion, and PaLM are among the best-known foundation models used in generative AI deployments.

There are two principal approaches at their disposal — opting for a “full control” model hosted on their own public cloud or accessing generative AI as a managed cloud service from an external vendor, which promises speed and simplicity.

Step 4. Adapting foundational models with data

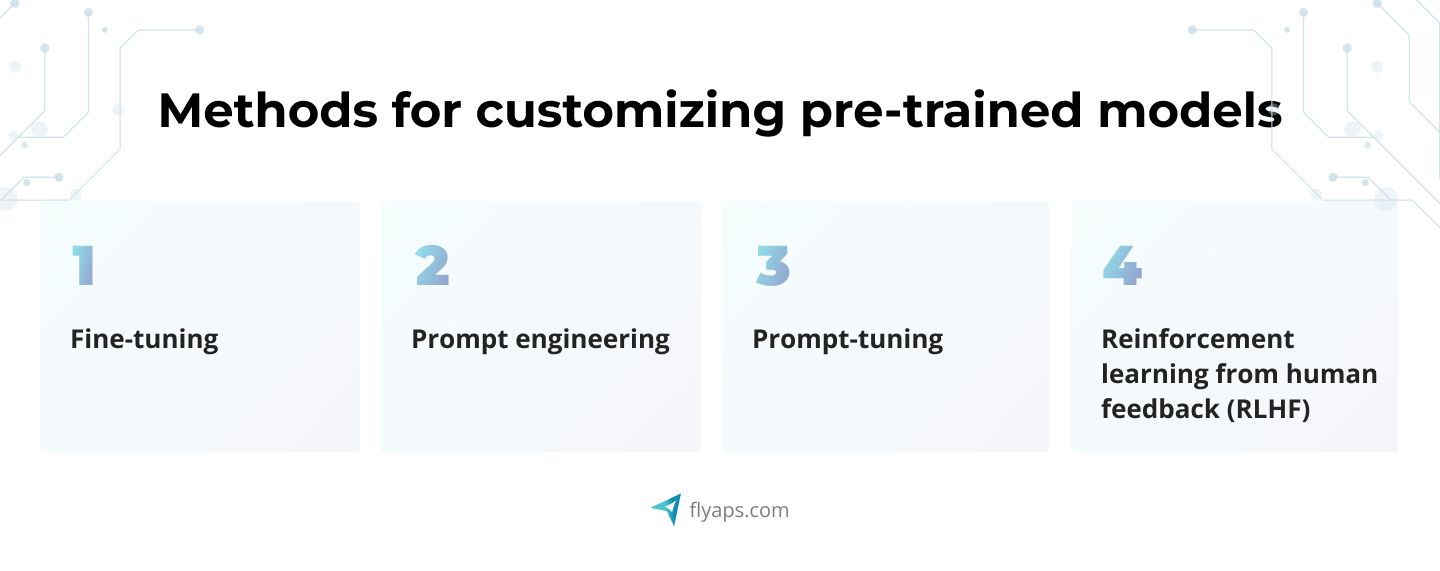

As data is crucial for any AI project, especially generative ones, tech specialists have to look for ways of adapting pre-trained models they decided on during the third step with their proprietary data. There are four main methods for customizing their pre-trained models:

1. Fine-tuning

Fine-tuning involves updating the parameters of a pre-trained model with additional labeled data (data that has clear indications or annotations) and adapting it for specific tasks without starting from scratch. Although pre-training knowledge is retained, there is a risk of overfitting, which can make the model overly specialized in one area and potentially hinder performance in other tasks.

In Sarah's case, suppose the aim is to give personalized suggestions regarding investment prospects. The business provides labeled financial data to supplement the dataset, thereby refining the pre-existing model to provide specific investment advice.

However, the model might become too focused on investment advice and struggle with more general financial queries. In other words, improving one kind of financial guidance may reduce the chatbot's capacity to handle a variety of financial inquiries.

There are three proven fine-tuning strategies:

- Updating embedding layer(s): Refines layers converting input into meaningful representations.

In our example, this would involve refining the chatbot’s components responsible for processing and interpreting financial data. In case the aim is to improve how the chatbot understands and interprets diverse financial queries, updating the embedding layer allows for a more nuanced and accurate representation of financial information.

- Updating language modeling head: Targets the components that generate output predictions, that is, the parts of the chatbot responsible for producing responses. For instance, if the chatbot has to offer more precise and personalized investment recommendations, updating the language modeling head helps it generate more accurate and contextually relevant responses.

- Updating all parameters: Comprehensive strategy involving refining the entire model. In the finance advisory context, it means fine-tuning all aspects of the chatbot, from input processing to output generation, to achieve a holistic improvement in performance.
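The practical difference between these three strategies is which parameters are allowed to change during training. The following sketch shows only that selection step, on a made-up list of parameter names. The names, the `FineTuneStrategy` enum, and the `trainable_parameters` helper are hypothetical; real models use their own naming schemes, and no training happens here.

```python
from enum import Enum


class FineTuneStrategy(Enum):
    EMBEDDINGS = "embeddings"
    LM_HEAD = "lm_head"
    ALL = "all"


# Hypothetical parameter names; real models use their own naming schemes.
PARAMETER_NAMES = [
    "embeddings.token.weight",
    "layers.0.attention.weight",
    "layers.0.mlp.weight",
    "layers.1.attention.weight",
    "layers.1.mlp.weight",
    "lm_head.weight",
]


def trainable_parameters(names: list[str], strategy: FineTuneStrategy) -> list[str]:
    """Return the parameters to update; everything else stays frozen."""
    if strategy is FineTuneStrategy.ALL:
        return list(names)
    prefix = "embeddings." if strategy is FineTuneStrategy.EMBEDDINGS else "lm_head."
    return [name for name in names if name.startswith(prefix)]


for strategy in FineTuneStrategy:
    selected = trainable_parameters(PARAMETER_NAMES, strategy)
    frozen = len(PARAMETER_NAMES) - len(selected)
    print(f"{strategy.value}: update {selected}, freeze {frozen} others")
```

In a framework such as PyTorch, the same idea is usually expressed by setting `requires_grad = False` on the parameters that should stay frozen. Updating fewer parameters is cheaper, while updating all of them gives the most room for improvement at the highest cost and overfitting risk.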

2. Prompt engineering

Prompt engineering in gen AI implementation entails customizing a frozen chatbot model (one whose parameters are not updated) for specific tasks by manually crafting text prompts. This method enables the gen AI-based solution to perform various financial tasks without undergoing extensive fine-tuning.

Suppose our finance advisory firm wants the chatbot to seamlessly handle tasks like providing investment insights, budgeting advice, and tax planning. Through prompt engineering, they can design text prompts that guide the chatbot's responses in these diverse financial areas.

Using a single frozen model across multiple financial tasks eliminates the need for storing separate tuned models. This simplifies the deployment of the chatbot for various purposes, promoting efficiency and versatility.

However, it's important to note that prompt engineering demands manual effort in designing effective prompts. Additionally, the model performance may not reach the level achieved by fine-tuned models tailored specifically for each task.
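As a rough illustration of prompt engineering for the three tasks mentioned above, the sketch below keeps one hand-written template per task and fills in the client's question. The template wording, the task keys, and the `build_prompt` helper are all hypothetical. The resulting string would be sent to the same frozen model regardless of task, and that call is intentionally left out.

```python
# Hypothetical task-specific templates; the wording is illustrative only.
PROMPT_TEMPLATES = {
    "investment_insights": (
        "You are a support assistant at a financial advisory firm.\n"
        "Explain the key considerations for the client's question in plain language.\n"
        "Client question: {question}"
    ),
    "budgeting_advice": (
        "You are a support assistant at a financial advisory firm.\n"
        "Suggest practical budgeting steps for the client's situation.\n"
        "Client question: {question}"
    ),
    "tax_planning": (
        "You are a support assistant at a financial advisory firm.\n"
        "Outline general tax planning points and note when a specialist is needed.\n"
        "Client question: {question}"
    ),
}


def build_prompt(task: str, question: str) -> str:
    """Fill the template for the given task; the model itself is never modified."""
    if task not in PROMPT_TEMPLATES:
        raise ValueError(f"Unknown task: {task!r}")
    return PROMPT_TEMPLATES[task].format(question=question.strip())


prompt = build_prompt("budgeting_advice", "How much should I set aside each month?")
print(prompt)
```

Adding a new task means adding a new template rather than storing another tuned model, which is where the deployment simplicity comes from. The manual effort goes into writing and testing the templates.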

3. Prompt-tuning

Prompt-tuning optimizes the performance of a solution for specific tasks by treating prompts as adjustable parameters. This method ensures efficient use of resources while enhancing output quality.

Suppose Sarah’s company wants to optimize the chatbot's responses for tasks such as portfolio analysis and risk assessment. By treating prompts as tunable parameters and training only those, they can achieve task-specific efficiency without the extensive resource demands of fine-tuning the entire model.

This approach strikes a balance between conserving resources, delivering higher-quality outputs, and reducing manual effort.
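The core mechanic of prompt-tuning, training a small prompt while the model stays frozen, can be shown with a toy numerical example. This is a heavy simplification under stated assumptions: the "model" is a fixed linear scorer rather than a language model, the prompt is a single vector rather than a sequence of embeddings, and the data is invented. Only the prompt vector is ever updated.

```python
# Frozen "model": a fixed linear scorer applied to the mean of the prompt
# vector and the input vector. These weights are never updated.
FROZEN_WEIGHTS = (0.5, -1.0, 2.0)

# Invented (input, target) pairs for a single task.
EXAMPLES = [
    ([1.0, 0.0, 0.0], 1.25),
    ([0.0, 1.0, 0.0], 0.5),
    ([0.0, 0.0, 1.0], 2.0),
]


def model_output(prompt, inputs):
    """Mean-pool the prompt and input vectors, then apply the frozen weights."""
    pooled = [(p + x) / 2 for p, x in zip(prompt, inputs)]
    return sum(w * v for w, v in zip(FROZEN_WEIGHTS, pooled))


def mean_squared_error(prompt, examples):
    return sum((model_output(prompt, x) - y) ** 2 for x, y in examples) / len(examples)


def tune_prompt(examples, steps=200, lr=0.05):
    """Gradient descent on the prompt vector only, minimizing squared error."""
    prompt = [0.0, 0.0, 0.0]
    for _ in range(steps):
        grad = [0.0, 0.0, 0.0]
        for inputs, target in examples:
            error = model_output(prompt, inputs) - target
            # d(error^2)/d(prompt_i) = 2 * error * (w_i / 2) = error * w_i
            for i, w in enumerate(FROZEN_WEIGHTS):
                grad[i] += error * w / len(examples)
        prompt = [p - lr * g for p, g in zip(prompt, grad)]
    return prompt


initial_loss = mean_squared_error([0.0, 0.0, 0.0], EXAMPLES)
tuned_prompt = tune_prompt(EXAMPLES)
tuned_loss = mean_squared_error(tuned_prompt, EXAMPLES)
print(f"loss before tuning: {initial_loss:.4f}, after tuning: {tuned_loss:.6f}")
```

Because only three numbers are trained here, storing one tuned prompt per task is far cheaper than storing one tuned model per task, which is the resource argument made above.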

4. Reinforcement learning from human feedback (RLHF) for human-aligned models

In our imaginary case, RLHF aligns the language models of the chatbot with human values by leveraging human feedback. This process involves training a reward model and fine-tuning the model using reinforcement learning to optimize performance based on the feedback received.

This approach is adopted in three steps:

- Pre-training a language model (LM): Uses an existing model as a starting point, which in the finance context could be a well-established financial language model.

- Gathering data and training a reward model (RM): Involves collecting human feedback to create a reward dataset specific to financial interactions, ensuring the model learns from real-world financial scenarios.

- Fine-tuning with reinforcement learning (RL): Optimizes the chatbot's model parameters to maximize rewards based on the reward model, refining its performance in alignment with user preferences.
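The three steps above can be sketched end to end on a toy problem. Everything here is an assumption made for illustration: the "policy" is a softmax over three canned responses rather than a language model, the preference pairs are invented, the reward model is a simple Bradley-Terry style fit, and the policy update uses the exact gradient of expected reward. Production RLHF pipelines commonly use algorithms such as PPO with a penalty for drifting from the original model, and none of that is modeled here.

```python
import math

RESPONSES = [
    "vague answer",
    "clear answer with risk disclosure",
    "overconfident promise",
]

# Step 1: the "pre-trained" policy, here uniform logits over canned responses.
PRETRAINED_LOGITS = [0.0, 0.0, 0.0]

# Step 2 input: invented human feedback as (preferred index, rejected index).
PREFERENCES = [(1, 0), (1, 2), (0, 2), (1, 0)]


def train_reward_model(preferences, n, steps=500, lr=0.1):
    """Fit one reward score per response with a Bradley-Terry style logistic loss."""
    rewards = [0.0] * n
    for _ in range(steps):
        for winner, loser in preferences:
            # Probability the reward model currently assigns to the human choice.
            p_win = 1.0 / (1.0 + math.exp(rewards[loser] - rewards[winner]))
            # Gradient ascent on log(p_win).
            rewards[winner] += lr * (1.0 - p_win)
            rewards[loser] -= lr * (1.0 - p_win)
    return rewards


def softmax(logits):
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fine_tune_policy(logits, rewards, steps=200, lr=0.5):
    """Step 3: ascend the exact gradient of expected reward under the softmax policy."""
    logits = list(logits)
    for _ in range(steps):
        probs = softmax(logits)
        expected = sum(p * r for p, r in zip(probs, rewards))
        # d E[reward] / d logit_i = p_i * (r_i - E[reward])
        logits = [l + lr * p * (r - expected) for l, p, r in zip(logits, probs, rewards)]
    return softmax(logits)


rewards = train_reward_model(PREFERENCES, len(RESPONSES))
probs = fine_tune_policy(PRETRAINED_LOGITS, rewards)
best = RESPONSES[probs.index(max(probs))]
print(f"most likely response after fine-tuning: {best}")
```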

Step 5. Assessing overall enterprise readiness

Before delving into gen AI adoption, our finance advisory firm must evaluate their integration and interoperability frameworks. Ensuring seamless integration with existing systems and interoperability across platforms is fundamental for a cohesive implementation.

The security and safety of foundation models take center stage. Sarah and her team must assess the robustness and safety protocols of the chosen foundation models, ensuring they align with industry standards and best practices.

The adoption of gen AI introduces a fresh urgency for a robust and responsible AI compliance program. Adhering to laws, regulations, and ethical standards is not only a legal necessity but a foundational step in building a secure and reliable AI infrastructure.

Administering controls to assess the potential risks of gen AI use cases at the design stage is a proactive measure. Identifying and mitigating risks early in the implementation process contributes to a more resilient and risk-aware AI foundation.

Step 6. Proof of concept (PoC)

Before diving into full-scale implementation, we recommend including a proof of concept (PoC) as a crucial part of the generative AI strategy. This small-scale experiment allows the firm to assess the technical feasibility of integrating generative AI into its operations. Despite concerns about prolonging the project timeline, the benefits of a PoC outweigh the drawbacks.

- Technical viability check: The PoC serves as a technical litmus test, validating if the idea of using generative AI aligns with the firm's goals and requirements.

- Cost-effectiveness: PoCs are relatively cheap (averaging between $15k and $20k) and carry minimal risk. This cost is a small investment compared to the potential benefits of a successful gen AI implementation.

- Risk mitigation: The PoC acts as a risk mitigation strategy, allowing you to identify challenges early on. If the PoC fails, the project can be dropped, saving both time and money.

- Complexity consideration: The complexity of the intended implementation makes the PoC even more crucial. The more intricate the gen AI integration, the stronger the case for building a proof of concept.

Some key phases of a PoC include:

- Data collection: Gathering data for training and testing the model is a fundamental part of the PoC, ensuring the model has the necessary information to perform effectively.

- Algorithm exploration: Exploring and selecting appropriate generative AI algorithms is integral during the PoC stage to align the technology with the firm's objectives.

- Development environment setup: Setting up the development environment is a preparatory step for building and testing the prototype model.

- Prototype model building: The actual construction and testing of the prototype model take place during the PoC, providing tangible insights into the gen AI's potential.

- Stakeholder feedback: Gathering feedback from stakeholders and users is crucial for refining the gen AI solution based on practical insights.

- Hypothesis verification: The PoC's ultimate goal is to verify the hypothesis of using generative AI. The assessment of results guides the decision to continue, iterate, or discontinue the project.
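The final phase, deciding whether to continue, iterate, or discontinue, is easier when the criteria are agreed on before the PoC starts. The sketch below shows one possible way to encode such a rule. The two metrics, the thresholds, and the `next_step` helper are hypothetical placeholders that each team would replace with its own.

```python
from dataclasses import dataclass


@dataclass
class PocResult:
    answer_accuracy: float       # share of test questions answered acceptably (0 to 1)
    stakeholder_approval: float  # share of stakeholders rating the prototype positively (0 to 1)


def next_step(
    result: PocResult,
    accuracy_target: float = 0.85,
    approval_target: float = 0.70,
    iterate_margin: float = 0.15,
) -> str:
    """Return 'continue', 'iterate', or 'discontinue' based on the largest shortfall."""
    worst_gap = max(
        accuracy_target - result.answer_accuracy,
        approval_target - result.stakeholder_approval,
    )
    if worst_gap <= 0:
        return "continue"      # every target was met
    if worst_gap <= iterate_margin:
        return "iterate"       # close enough to justify another round
    return "discontinue"       # the hypothesis was not supported


print(next_step(PocResult(answer_accuracy=0.80, stakeholder_approval=0.75)))
```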

The PoC phase is a strategic step that allows our finance advisory firm to test the waters before committing to a full-scale implementation of a gen AI-powered chatbot.

Step 7. Pilot / MVP

Once our finance advisory firm is confident in moving forward after validating the hypothesis through PoC, it's time to start the actual implementation phase. Unlike the PoC, which focuses on technical viability, the minimum viable product (MVP) serves the purpose of delivering a functional version of the gen AI-powered chatbot.

Key stages of the MVP step:

- Refining the model: Continuous refinement of the gen AI model is a key aspect during the MVP stage. This involves making improvements to enhance the model's performance and capabilities based on real-world usage.

- Data collection expansion: If needed, the firm may expand data collection efforts during the MVP stage to further enrich the model's understanding and responsiveness.

- UI development: Crafting a user-friendly and intuitive interface is crucial. UI development ensures that users can interact seamlessly with the gen AI chatbot.

- Integration with existing systems: Integration with the firm's existing systems is undertaken, ensuring that the gen AI chatbot harmoniously aligns with established workflows.

- Regulatory compliance: Ensuring that the designed solution complies with relevant industry regulations and data privacy laws is paramount for a finance advisory firm dealing with sensitive financial information.

- User feedback gathering: Actively seeking user feedback is integral during the MVP stage. It allows for adjustments based on user experiences and preferences.

- Fine-tuning and performance optimization: Ongoing fine-tuning and optimization efforts are conducted to enhance the gen AI chatbot's overall performance.

Goals and success metrics

The specific goals of the MVP are defined at the project's outset, together with the success metrics used to measure them. These objectives aim to confirm whether the gen AI chatbot effectively solves the identified business problem. User validation of this hypothesis during the MVP stage indicates readiness for full implementation.

In essence, the Pilot/MVP phase serves as the bridge from experimentation to practical implementation, ensuring that the gen AI-powered chatbot not only functions technically but delivers tangible value to users and aligns with the business problem at hand.

Step 8. Full generative AI implementation

Having successfully navigated the Pilot/MVP step and garnered positive user feedback, Sarah and her firm are now ready for the culmination of their generative AI journey — full implementation. This phase involves transitioning from a prototype to a fully operational gen AI solution, aligning with the business goals defined in the first step.

How Flyaps can help you with generative AI implementation

It's been over a decade since we began helping companies across industries adopt cutting-edge technologies, including AI. If your case is similar to Sarah’s, let's explore how we can leverage our expertise to take your business into the innovative realm of generative AI.

Generative AI consulting

Our team of experts will conduct in-depth analyses of your organization's unique needs and challenges, providing strategic guidance and identifying key areas across industries where generative AI can have the greatest impact.

Tailored development

Given the cutting-edge nature of generative AI, we understand its nuances across multiple applications. With a wealth of experience in various AI technologies, we will create a solution that aligns with your business goals and existing infrastructure, regardless of your industry.

Seamless integration

Generative AI isn't a standalone solution — it requires careful integration with your current systems. Our experienced team ensures a seamless integration process so that generative AI applications work in harmony with your legacy systems and other software tools.

Still have questions on how to implement generative AI into your business? No worries — we've got the answers! Reach out to us and our experts will handle all the technical details for you!