Prompt Engineering Tools: Things You Must Know to Gain Maximum Value From Gen AI

When ChatGPT was introduced to the public, everyone got instantly hooked. But soon it became obvious that the tool itself isn’t of great help if you don’t know how to use it. The same applies to any Gen AI solution, whether it’s an image generator or a code-writing tool. Using GitHub Copilot to write code in seconds sounds impressive, but without good prompts it remains a pipe dream.

That’s when prompt engineering tools step in. At Flyaps, we have hands-on experience building generative AI solutions for our clients. We also experiment with different generative AI use cases in our company, creating various AI prompts and seeing how they improve our work processes.

In this article, we’ll share what we learned after experimenting with different large language models (LLMs) and prompt engineering software, as well as how to write prompts that AI perfectly understands.

But before diving into the topic, let’s first settle on what exactly prompt engineering is.

Is prompt engineering a skill or a profession of its own?

Prompt engineering is an umbrella term for the practice of designing and refining the inputs (prompts) given to large language models and gen AI tools (ChatGPT, Gemini, Microsoft Copilot). Developers write a prompt, review what the model returns, and then iteratively refine the prompt to improve the output accuracy.

Is prompt engineering a skill that developers dealing with language models must have or a new profession?

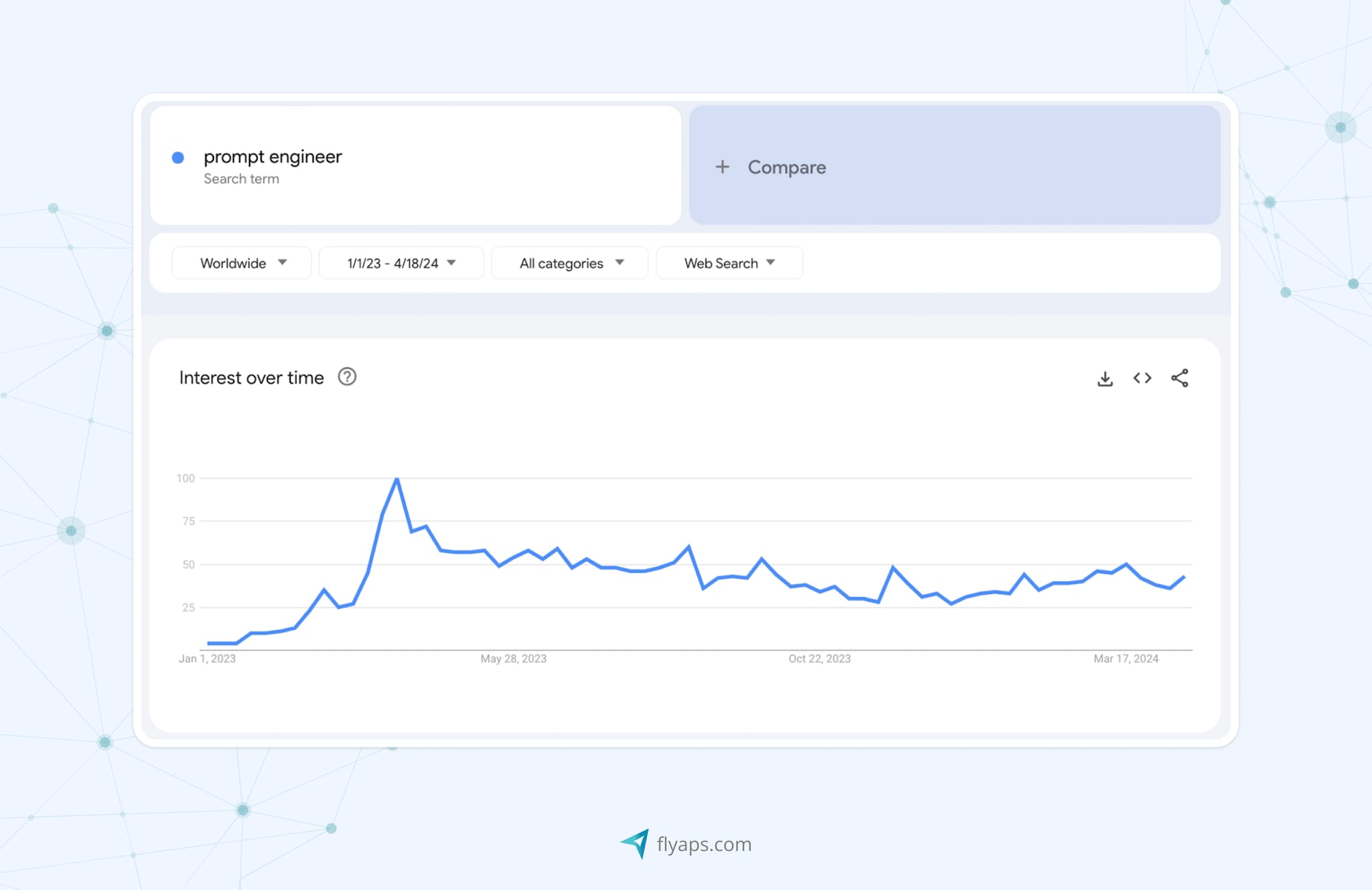

When a new technology emerges, it often creates new professions. This once happened with the rise of crypto and NFTs, where we saw the emergence of roles such as blockchain developers, crypto analysts, NFT artists, and so on. Similarly, with the wave of AI, we're witnessing new roles focused on machine learning, data science, AI ethics, and, of course, prompt engineering and prompt engineering software. But will these new jobs stand the test of time?

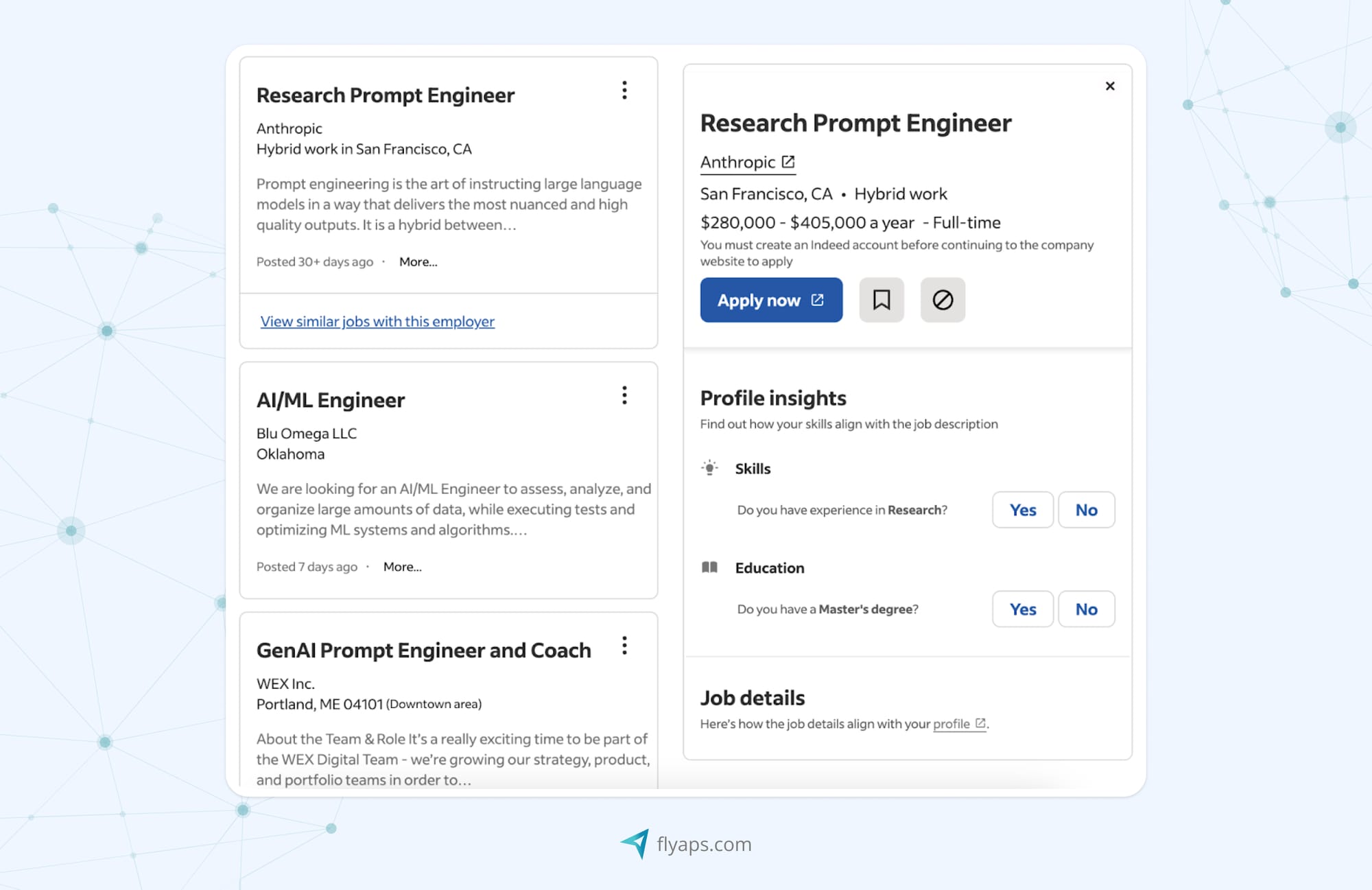

Job platforms such as Indeed and LinkedIn reflect this growing demand, with thousands of prompt engineer job listings in the US alone. Salaries for these roles span from $50,000 to upwards of $150,000 per year.

The skills listed in these postings usually include technical proficiency in Python and data structures, experience in machine learning, natural language processing and large-scale model training.

Gen AI models are definitely going to stay and companies are in a rush to find experts that could train them according to their needs. But whether it’s going to be a profession of its own in a year or two is an open question. From our perspective, it’ll most likely become one of the must-have skills for developers and other key generative AI roles working with AI tools.

“Does an AI developer require a prompt engineering skill? Yes and no: it depends on their tasks. If they work with LLMs, then definitely yes. Without this skill, they won’t get the results they want.”

– Alexander Arhipenko, CTO at Flyaps

Check out our skills and let’s find the right approach together.

Working with LLMs comes with a number of challenges. Developers need to be familiar with prompt engineering tools and prompt engineering frameworks to navigate these complexities. Here are some of the challenges that a developer working with AI models needs to be aware of:

Variability of model outputs

- One of the primary challenges in working with language models is their inherent variability. A single prompt can yield a range of different outputs, so developers must use prompts (and, where available, settings such as temperature) to keep the model's answers consistent instead of letting it generate a brand-new output every time.

Specifics of different LLMs and clouds

- Every AI model functions differently, and to use them efficiently, developers must familiarize themselves with various language models, cloud platforms, AI development platforms and strategies.

Ensuring precision and compliance

- Accuracy and precision are paramount, especially when it comes to compliance with regulations like GDPR. A slight deviation or mistake in the output can have significant implications, so a prompt engineer needs to ensure that the model doesn’t invent any information and produces only highly accurate responses compliant with relevant laws and standards.

Continuous updating and verification

- Language models constantly evolve, and the amount of company data they rely on keeps increasing. As local data grows and previous information becomes outdated, prompts need to be revisited and their outputs verified so that the answers stay accurate and relevant.

To sum up, as LLMs continue to grow data-wise and more businesses are looking to integrate AI into their apps, prompt engineering will soon become a must-have skill for any AI/ML engineer. But what exactly does a good prompt mean and, vice versa, what makes a bad prompt?

Good prompt vs a bad prompt

To put it simply, a good prompt is a clear roadmap for the model that specifies the desired output while also eliminating the chance of irrelevant outputs. It sets clear expectations and boundaries for the language model, ensuring that its answer aligns with the intended purpose.

“A prompt is similar to a programming language. In programming, a developer has a few options for writing a command, but with generative AI, the range of possible prompts is endless.”

– Alexander Arhipenko, CTO at Flyaps

This is why specifying constraints is crucial when writing natural language prompts. For instance, if you want the model to generate text from a given list of words in a fixed order, you need to specify that the model should not add new words. Otherwise, AI models such as GPT-3.5 may add words of their own and produce irrelevant gibberish.
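As a rough illustration of the word-list case, the sketch below builds a prompt with explicit constraints and checks a model's answer against them. The function names and the exact prompt wording are assumptions made for this example, not a prescribed format.

```python
def build_ordered_words_prompt(words):
    """Build a prompt asking for a sentence made only of the given words, in order."""
    word_list = ", ".join(words)
    return (
        "Write one sentence using the following words.\n"
        f"Words: {word_list}\n"
        "Constraints:\n"
        "- Use every word exactly once, in the order given.\n"
        "- Do not add, remove, or change any words.\n"
        "- Return only the sentence, with no explanation."
    )


def follows_constraints(words, model_output):
    """Return True if the output contains exactly the given words in the given order."""
    # Ignore case and basic punctuation before comparing.
    cleaned = [token.strip(".,!?;:").lower() for token in model_output.split()]
    cleaned = [token for token in cleaned if token]
    return cleaned == [word.lower() for word in words]
```

Checking the output in code is useful because even a well-constrained prompt does not guarantee that the model obeys every rule.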

Crafting a well-defined prompt takes more than just knowing what you need to get as a result. It’s about effectively conveying the task to the model. This includes using special prompt engineering techniques that offer precise instructions and examples to guide the model toward the desired outcome. And vice versa, if you forget to mention that the model can’t invent elements to complete the task, it may well do so, because it is built to produce a plausible completion rather than to stop and ask for missing details.

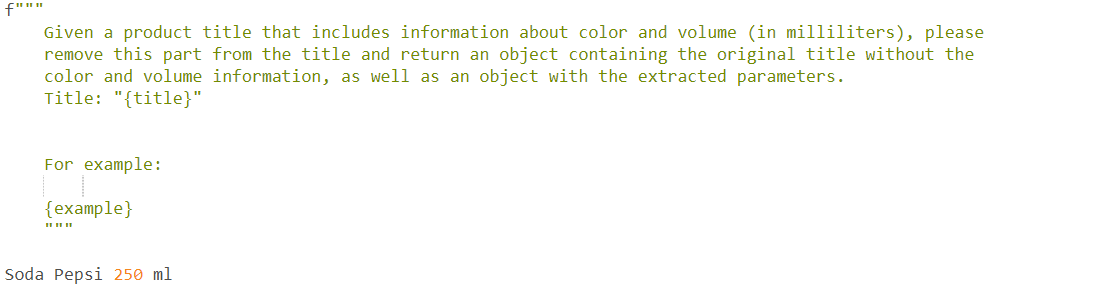

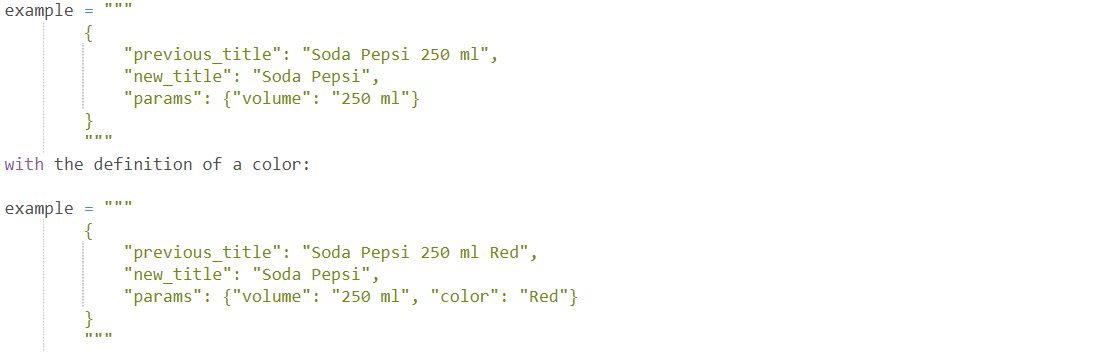

To illustrate a good prompt, let's consider a recent e-commerce project our team has worked on. Our goal was to categorize a large number of products by titles and specific parameters such as color and volume. To streamline this process, we integrated the product database with GPT-3.5 and wrote a prompt that the model could understand.

We described the task in detail and inserted an example of the desired output:

Here’s the result the model offered:

Thanks to the detailed task description and the example provided, the model correctly understood the task and classified the extensive database, standardizing parameters like volume and colors.
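The pattern described here (task description plus one worked example) can be sketched as follows. This is a hypothetical illustration only: the product titles, the JSON keys, and the wording are invented for the example and are not the prompt used in the project.

```python
import json

# Hypothetical example pair, invented for illustration.
EXAMPLE_TITLE = "Shampoo Fresh Mint 250ml green"
EXAMPLE_OUTPUT = {"category": "shampoo", "color": "green", "volume_ml": 250}


def build_classification_prompt(title):
    """Combine a task description, constraints, and one worked example."""
    return (
        "Classify the product by its title.\n"
        "Return a JSON object with the keys: category, color, volume_ml.\n"
        "Use null for any value that is not stated in the title. "
        "Do not invent values.\n\n"
        f"Title: {EXAMPLE_TITLE}\n"
        f"Output: {json.dumps(EXAMPLE_OUTPUT)}\n\n"
        f"Title: {title}\n"
        "Output:"
    )
```

Ending the prompt with an open "Output:" line nudges the model to answer in the same shape as the example.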

To sum up, here’s what differentiates a good prompt from a poorly written one:

| Good prompt | Bad prompt |
| --- | --- |
| Clearly states the desired output without unnecessary politeness. | Vague and ambiguous, lacks clear instructions on what is expected. |
| Specifies constraints to guide the model's behavior. | Allows the model to make irrelevant assumptions. |
| Includes examples to illustrate the desired outcome. | Makes it challenging for the model to understand what you want. |
| Provides context or leading questions. | Fails to guide the model towards the correct output. |

In essence, writing a good prompt is about clearly specifying the desired outcome while also mentioning what the model shouldn’t do. Here’s how we find this sweet spot at Flyaps.

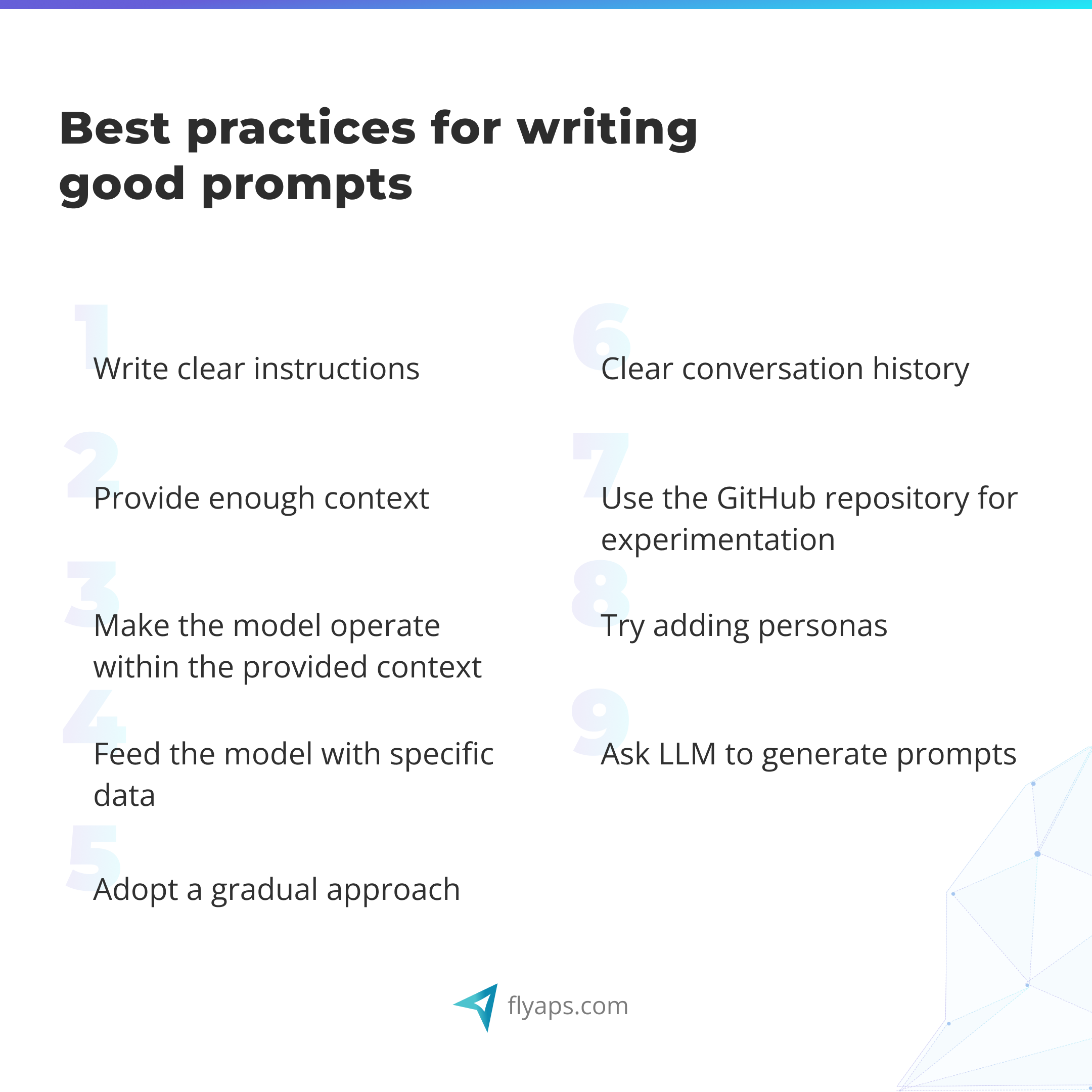

Best practices for writing good prompts

Writing effective prompts is both an art and a science. Here are some tips to help you craft prompts that bring accurate, relevant, and valuable outputs.

1. Write clear instructions

The instructions in your prompt creation process should be clear and direct, leaving no room for ambiguity. Avoid using phrases like "please" or "could you": AI systems aren’t humans, so dry instructions are the best way to communicate with them. Be explicit about what you want the model to do. For example, instead of writing "Could you please provide some information on prompt engineering?" input "Provide an overview of best practices for writing good prompts." This helps ensure prompt quality.

2. Provide enough context

Context is key to guiding the model towards the desired output. This might include examples, constraints, or background information. The more context you provide, the more accurate and relevant responses the model will generate. Here's an example of a prompt for writing unit tests:

“You are a software developer tasked with writing unit tests for a new login authentication module in a web application. The login module handles user authentication using email and password credentials. Your goal is to ensure that the login functionality works correctly, handles edge cases, and maintains security standards.”

Including precise context in LLM prompts improves output quality by defining boundaries.
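One common way to keep context separate from the task is the role-based message list that many chat-style LLM APIs accept. The sketch below assumes that format (a list of `role`/`content` dictionaries); `build_messages` is a hypothetical helper, not part of any library.

```python
def build_messages(context, task):
    """Put background context in a system message and the request in a user message."""
    return [
        {"role": "system", "content": context},
        {"role": "user", "content": task},
    ]


messages = build_messages(
    context=(
        "You are a software developer writing unit tests for a login "
        "authentication module that uses email and password credentials."
    ),
    task="Write unit tests covering valid login, wrong password, and empty email.",
)
```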

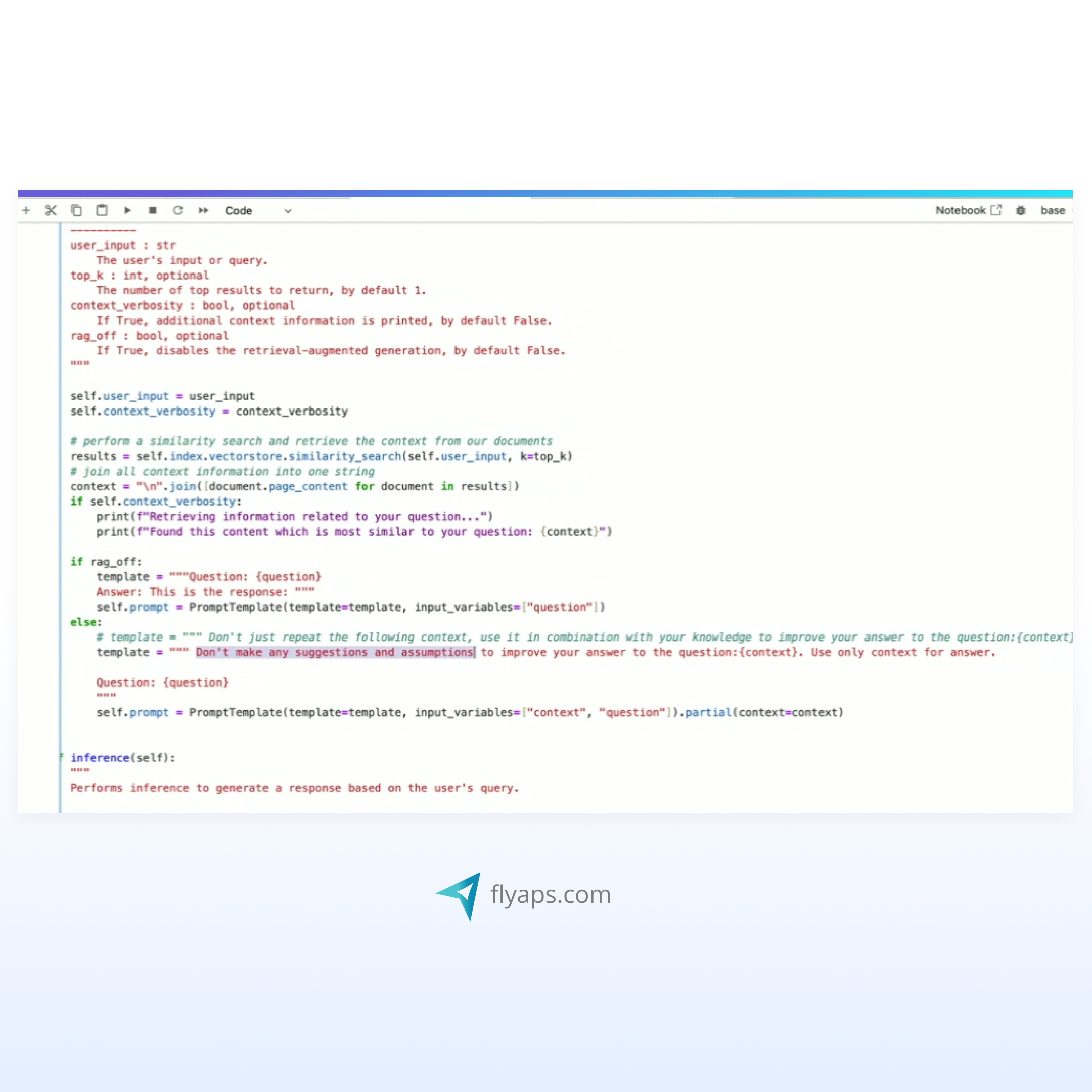

3. Make the model operate within the provided context

The model must operate strictly within the provided context. Generative AI tools like GPT-3.5 are incredibly versatile and will readily generate content on their own, which can lead to irrelevant outputs or hallucinations. Set clear boundaries in your prompt development process so the model stays focused on the task. For instance, for retrieving information from an internal knowledge base, add “Don’t make any suggestions or assumptions” to prevent incorrect AI-generated outputs.
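A minimal sketch of such a boundary, assuming the relevant knowledge base passages have already been selected: the prompt lists the passages, restricts the model to them, and gives it a fallback answer. The function name and the fallback wording are assumptions for this example.

```python
def build_grounded_prompt(question, passages):
    """Restrict the model to the supplied passages and give it a fallback reply."""
    numbered = "\n".join(
        f"[{index}] {passage}" for index, passage in enumerate(passages, start=1)
    )
    return (
        "Answer the question using only the context below.\n"
        "Don't make any suggestions or assumptions.\n"
        "If the context does not contain the answer, reply: "
        "Not found in the knowledge base.\n\n"
        f"Context:\n{numbered}\n\n"
        f"Question: {question}"
    )
```

Giving the model an explicit fallback reply matters: without one, it has no acceptable way to say that the answer is missing.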

4. Feed the model with specific data

When building your own gen AI systems, feed the model with specific data to improve the prompt performance. This might include product names, numbers, or information related to your business. This way, the model will have enough information about your business to generate more precise outputs with less room for error.

5. Adopt a gradual approach

Instead of applying a single-shot prompt approach, where the model is expected to get the best answer on the first try, we recommend starting with a general prompt and engaging in a conversation with the solution, adding details and more context along the way. This gradual approach allows you to refine the prompt based on the model’s responses, leading to better and more accurate outputs over time.

6. Clear conversation history

If you find that the model is hallucinating or producing irrelevant outputs, consider clearing the conversation history and starting over. Chat interfaces such as ChatGPT let you start a new conversation, and when you call a model through an API, you decide which earlier messages are sent with each request, so you can simply drop them. This can be especially useful when the model gets lost in the answers and needs a reset.
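Tips 5 and 6 can be sketched together with a small history holder. This is a minimal sketch that assumes the role-based message format used by many chat APIs; the `Conversation` class is hypothetical and makes no network calls.

```python
class Conversation:
    """Keeps the message history that would be sent along with each request."""

    def __init__(self, system_prompt):
        self.system_prompt = system_prompt
        self.messages = [{"role": "system", "content": system_prompt}]

    def add_user(self, text):
        """Add a user turn, for example a refinement of the previous request."""
        self.messages.append({"role": "user", "content": text})

    def add_assistant(self, text):
        """Record the model's reply so later turns can build on it."""
        self.messages.append({"role": "assistant", "content": text})

    def reset(self):
        """Drop all turns but keep the system prompt, giving the model a fresh start."""
        self.messages = [{"role": "system", "content": self.system_prompt}]
```

Each `add_user` call after a reply is one step of the gradual approach, and `reset` is the equivalent of clearing the history when the conversation has drifted.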

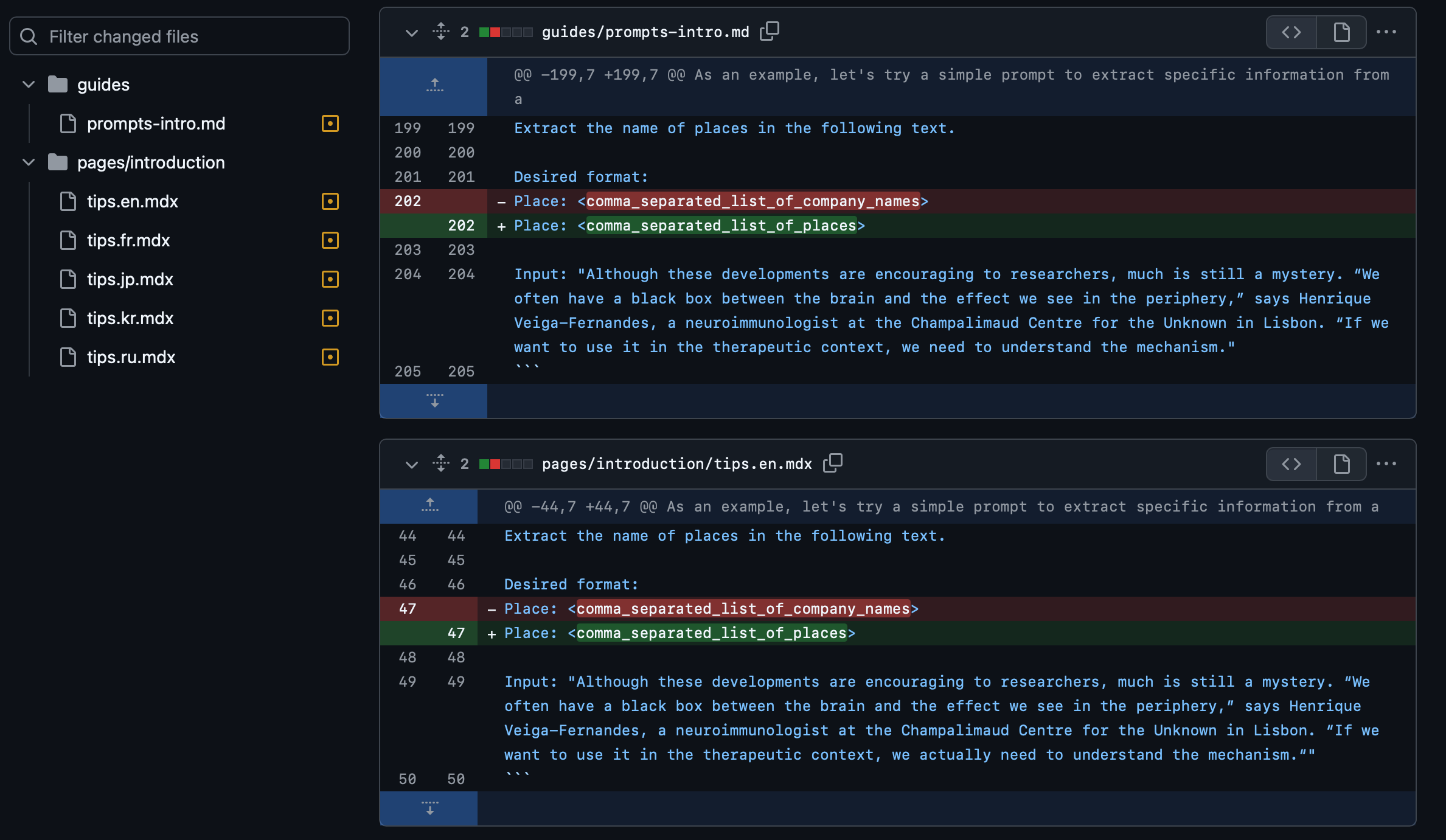

7. Use GitHub repositories for experimentation

GitHub repositories of prompts can be of great help for trying out different prompts and tracking their performance. Some repositories are designed for specific models such as ChatGPT, while others consist of general prompt templates, guides, and prompt engineering techniques for testing. Whether you're testing Python code or automatic user interface generation, GitHub can be a rich resource for developing and tracking specific prompts.

8. Try adding personas

Add personas to your prompts to tailor the model’s responses to a specific audience. For example, you could ask the model to explain something as if it were talking to a child for a simpler answer or specify that the model is an expert in a specific domain to get more detailed and technical responses. You can also specify the style, job position, age, or any other necessary context to tune the model’s output.
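A persona can be treated as a few optional lines placed before the task. The helper below is a hypothetical sketch; the parameter names and sentence templates are assumptions chosen for the example.

```python
def with_persona(task, role=None, audience=None, style=None):
    """Prefix a task with optional persona, audience, and style instructions."""
    lines = []
    if role:
        lines.append(f"You are {role}.")
    if audience:
        lines.append(f"Explain it to {audience}.")
    if style:
        lines.append(f"Write in a {style} style.")
    lines.append(task)
    return "\n".join(lines)


simple = with_persona("Explain what an API is.", audience="a ten-year-old child")
expert = with_persona(
    "Explain what an API is.",
    role="a senior backend engineer",
    style="precise, technical",
)
```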

9. Ask an LLM to generate prompts

Open two separate chat windows or AI platforms, for instance, OpenAI's ChatGPT for one chat and another general AI platform for the second chat.

In the first chat window with GPT-4 or another LLM, ask it to generate a series of prompts. These prompts should be varied in nature to elicit different types of responses. For example, "Create a prompt outlining the different types of functions in programming" or "Write a prompt that breaks down the various categories of functions in programming".

Take the prompts generated from the first chat and insert them one by one into the second chat window of the same or another AI solution. Then analyze the responses to see how AI interprets and expands upon the prompts generated by the LLM. Look for patterns, creativity, coherence, and any unexpected or interesting outcomes.
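The two-window experiment can also be scripted. In the sketch below, `generator` and `responder` are placeholders for whatever functions send text to your two models and return their replies; they are assumptions, and no real API is called here.

```python
def cross_test_prompts(generator, responder, topic, count=3):
    """Ask one model for prompts, then feed each prompt to a second model."""
    request = (
        f"Write {count} different prompts about {topic}. "
        "Return one prompt per line, with no numbering."
    )
    raw = generator(request)
    # One prompt per non-empty line of the first model's reply.
    prompts = [line.strip() for line in raw.splitlines() if line.strip()]
    # Pair each generated prompt with the second model's answer for review.
    return [(prompt, responder(prompt)) for prompt in prompts]
```

The returned pairs are what you would then review by hand for patterns, coherence, and unexpected outcomes.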

These practices can help you craft prompts and get the maximum value of AI to improve productivity in numerous ways. Here’s a specific example of how prompt engineering can help you at work.

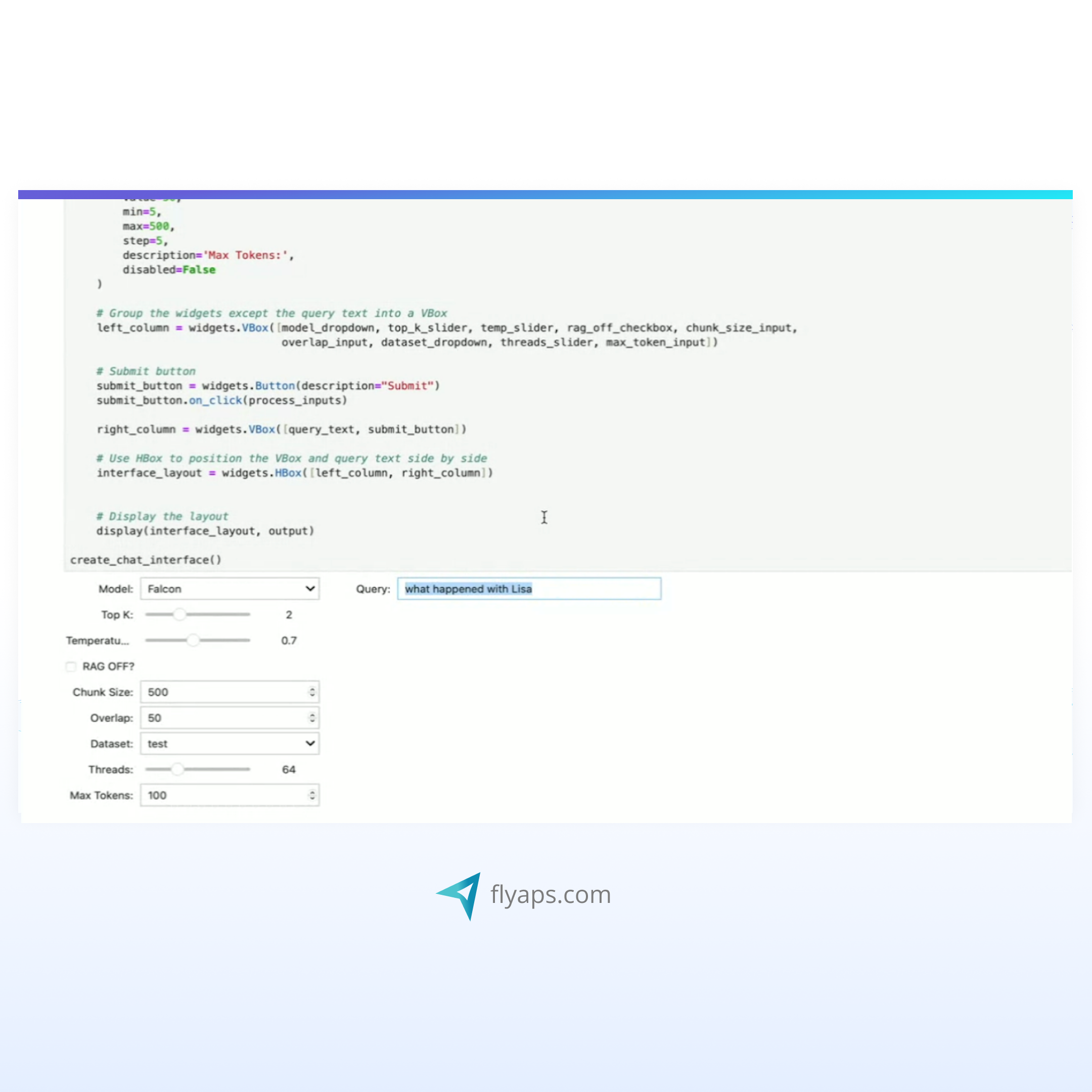

Natural language prompts in action: AI that classifies the company’s knowledge base

Software development companies often grapple with extensive knowledge bases of stored video and audio files, making it challenging for newcomers to understand the project specifics and developers to quickly access the necessary information. Flyaps is no exception here, so we decided to bring the power of AI to solve this pain.

To address this challenge, we implemented an AI-powered chatbot that allows us to find the needed information in the entire database in seconds. With this chatbot, developers can ask any project or company-related questions and get responses complete with links to the original documents and timestamps to video files. The chatbot operates exclusively within our internal knowledge base, ensuring employees have direct access to data.

As with any generative AI solutions, we had to make sure that our chatbot operates only within the internal dataset and doesn’t make things up. This is when prompt engineering steps in to refine the process. Unlike simple input queries, we write specific questions to guide the model towards the desired output. For instance, asking the chatbot to "summarize what happened in Vancouver" might yield a general response. So we have to specify what exact type of information is needed (specific location, witnesses, time, and such) and add constraints so that the model only provides the relevant information.

To further optimize the chatbot's performance, we developed our own search models tailored to our specific documents and datasets. These custom search models enable the chatbot to work more efficiently with our documents, improving the accuracy and relevance of the responses.
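The custom search models themselves are not described here, so the sketch below uses plain keyword overlap as a stand-in to show the general shape: find the most relevant entries, then pass them to the model together with their sources and timestamps. The document fields (`text`, `source`, `timestamp`) and function names are hypothetical.

```python
def search(query, documents, top_k=2):
    """Rank documents by how many query words they share (a naive stand-in for real search)."""
    query_words = set(query.lower().split())
    scored = []
    for doc in documents:
        overlap = len(query_words & set(doc["text"].lower().split()))
        if overlap:
            scored.append((overlap, doc))
    # Highest overlap first; ties keep their original order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def format_context(results):
    """Render search results with their source and timestamp for use in a prompt."""
    return "\n".join(
        f"- {doc['text']} (source: {doc['source']}, at {doc['timestamp']})"
        for doc in results
    )
```

Keeping the source and timestamp next to each passage is what lets the chatbot cite where an answer came from.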

AI capabilities combined with prompt engineering are a revolution in productivity. Whether it’s about streamlining the onboarding process or customer engagement, AI increases efficiency in a way that we could never achieve through manual processes and human-only intervention.

Now, can AI models do prompt engineering for you?

As we briefly mentioned, you can experiment with AI to make it perform its own prompt engineering. After all, if AI can generate responses, why can't it come up with prompts as well? However, things are not that simple.

While AI models are incredibly powerful, they lack the critical thinking capabilities that humans have. A machine's understanding begins and ends with the prompt it receives; it doesn’t think or try to understand the underlying context in the same way humans do.

“Writing a prompt is like aiming at a target. Humans can see the target and adjust their aim based on factors like wind speed, distance, and so on. Machines, on the other hand, can’t "see" the target, they shoot based on inserted parameters that describe the location of the target. Those parameters must be set by humans and the result depends entirely on the accuracy of the prompt.”

– Oleksii, developer at Flyaps

While there are AI models like Leonardo AI that can generate prompts themselves based on several keywords, it doesn't work in more complex scenarios. AI models can certainly assist in generating prompts but their relevance won’t be as good without human oversight.

That’s why if you're looking to empower your business operations with AI, it's crucial to trust the job to experts who understand the intricacies involved. While AI can assist in generating prompts, prompt engineering is a process that should be done by humans.

If you're ready to take your business operations to the next level with AI-powered solutions tailored to your needs, trust the prompt engineering experts at Flyaps. Contact us today to learn more about how we can help empower your business with AI.


Frequently asked questions about prompt engineering framework

Let's look at some of the most common questions people have about prompt engineering tools.

What is a prompt engineering tool?

A prompt engineering tool is software designed to assist users in creating, testing, and improving prompts for AI models like GPT, BERT, or DALL-E. With its help, developers, data scientists, and AI engineers experiment with different prompts to get more accurate, relevant, and context-aware outputs from AI systems.

What are prompt tools?

Prompt tools are software or platforms that assist users in creating and improving AI prompts for better results. These tools provide interfaces to design prompts, test their effectiveness, and track performance. Examples include PromptPerfect for optimization, and GitHub repositories where users can store, share, and refine prompts collaboratively.

What are prompting tools?

The terms "prompting tools" and "prompt engineering tools" are used interchangeably to describe software or platforms that assist in designing, refining, and managing prompts for new and popular AI models.

What are prompt engineering techniques?

Prompt engineering techniques are methods for creating and optimizing prompts that guide large language models to generate more accurate, contextually relevant, and useful outputs.

What are the three types of prompt engineering?

There is no fixed set of three. The most commonly cited prompt engineering approaches are zero-shot prompting, few-shot prompting, and iterative prompt refinement, with constraint usage and prompt decomposition often listed alongside them.
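To make the difference between zero-shot and few-shot concrete, here is a minimal sketch. The "Input:"/"Output:" layout is one common convention, assumed here for illustration, and the function names are hypothetical.

```python
def zero_shot(task, text):
    """Zero-shot: describe the task and give the input, with no examples."""
    return f"{task}\n\nInput: {text}\nOutput:"


def few_shot(task, examples, text):
    """Few-shot: add worked input/output pairs before the real input."""
    shots = "\n\n".join(f"Input: {inp}\nOutput: {out}" for inp, out in examples)
    return f"{task}\n\n{shots}\n\nInput: {text}\nOutput:"


prompt = few_shot(
    "Label the sentiment as positive or negative.",
    [("Great battery life", "positive"), ("Screen cracked in a week", "negative")],
    "Fast delivery and works well",
)
```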

Which UI is best for prompt engineering?

The best UI for prompt engineering provides a user-friendly environment with an intuitive interface, ideally one that gives users real-time feedback. It should support prompt experimentation, performance analysis, and collaboration while making it easy for users to interact with AI models.

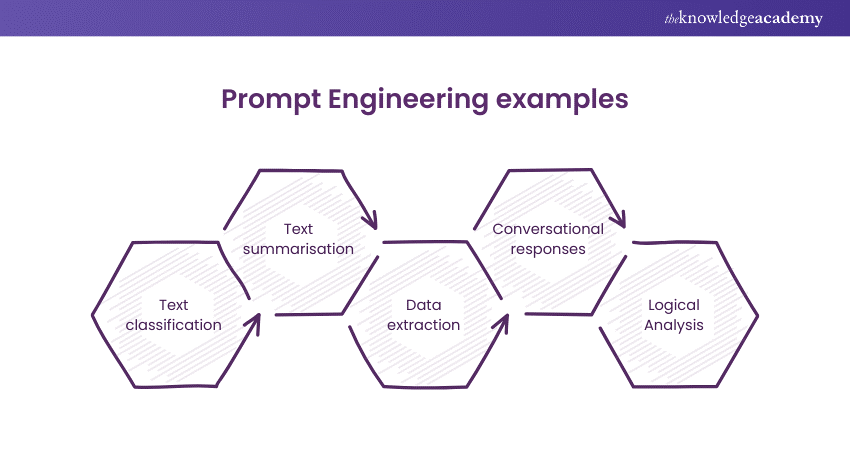

What are prompt engineering examples?

Examples of prompt engineering include email drafting, code completion, the creation of prompts to guide chatbots in customer service, and product description generation.

What is a prompt in software engineering?

In software engineering, a prompt is a directive or input provided to an AI model. It instructs the model to generate specific outputs related to programming or technical tasks. For example, a prompt could ask the model to write a Python function, debug code, or explain a software concept.

What are some examples of prompts?

Typical examples include "Generate a summary of this article," "Write Python code for sorting numbers," "Translate this paragraph into French," "Generate a SQL query to find customers who made a purchase last month," and "Explain blockchain technology to a beginner."

What are prompt engineering frameworks?

Prompt engineering frameworks are systems, libraries, or platforms that help developers design, manage, and optimize complex prompts for AI models. They provide tools for chaining prompts, integrating external data sources, and managing inputs/outputs across different tasks. Examples include LangChain for building prompt chains and PromptPerfect for prompt optimization.
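The idea of chaining prompts can be shown without any framework. The sketch below is plain Python and does not use the API of LangChain or any other library; `call_model` is a placeholder for whatever function sends a prompt to your model, and `run_chain` is a hypothetical helper.

```python
def run_chain(steps, call_model, initial_input):
    """Run prompt templates in sequence, feeding each output into the next step."""
    value = initial_input
    for template in steps:
        # Each template has an {input} slot filled with the previous result.
        value = call_model(template.format(input=value))
    return value


steps = [
    "Summarize the following text in one sentence: {input}",
    "Translate this sentence into French: {input}",
]
```

Frameworks add features on top of this basic loop, such as data source integration and input/output management.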

Is PromptPerfect free?

PromptPerfect offers both free and paid plans depending on the usage limits and features.