AI Data Preparation: How to Make the Most of Your Data

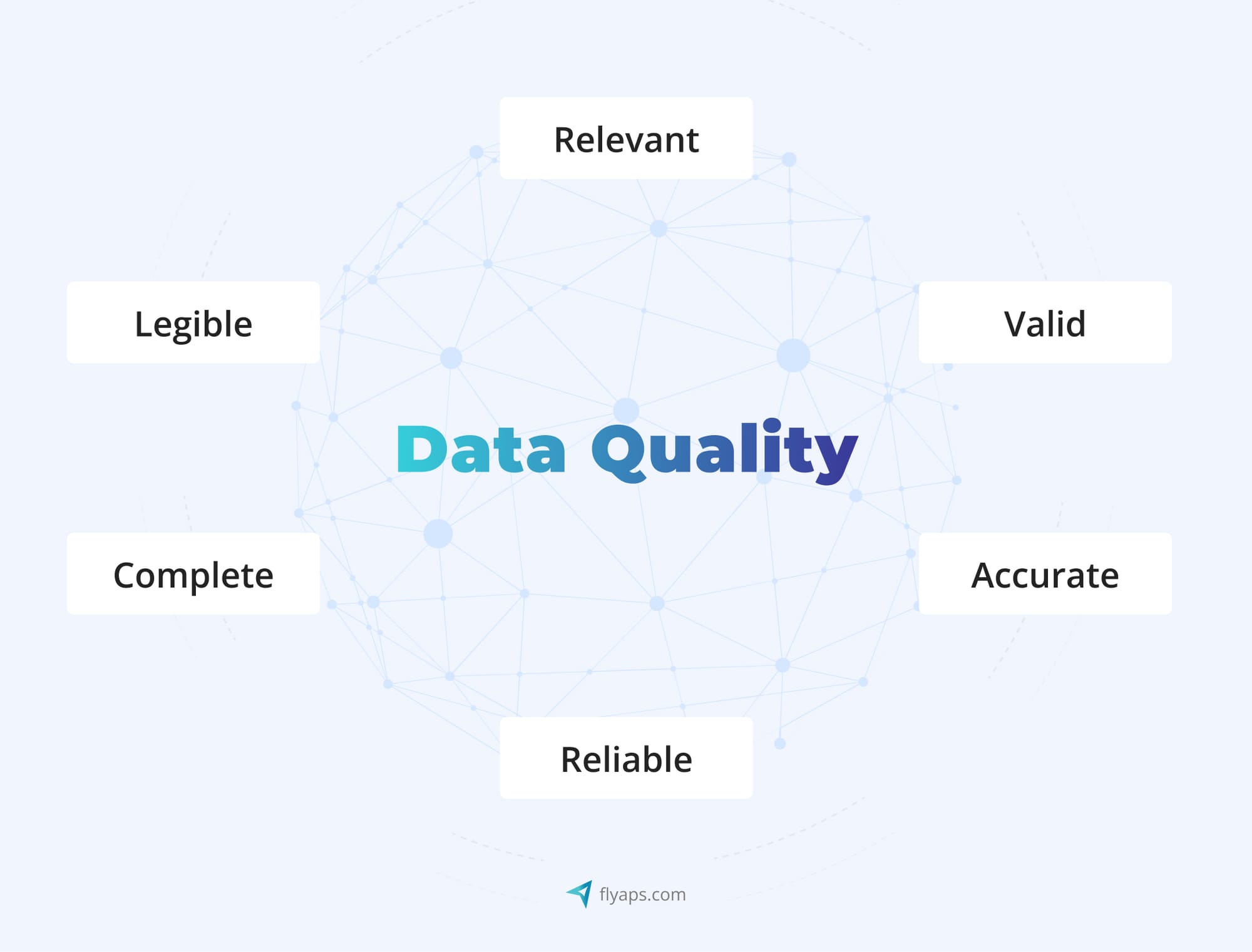

Businesses often expect AI-driven tools to predict trends, optimize operations, and uncover hidden opportunities much faster and with better accuracy. But AI isn’t a magic pill that works instantly. To generate real value, it has to rely on high-quality, relevant, and well-structured data.

And raw data doesn’t clean or organize itself; that’s a job for people. If you feed AI messy, unstructured, or irrelevant data, it won’t produce accurate results. The outcome will be wasted time, money, and trust. The true power of AI lies in the quality of the data it’s given.

At Flyaps, we understand the critical role data plays for AI. We’ve witnessed numerous cases in the industry where the lack of quality data blocked the realization of brilliant ideas. At the same time, we’ve seen how strategic data preparation and governance helped bring AI projects to success – whether building AI Agent solutions or innovative extensions. Take, for instance, the case of GlossaryTech extension and CV Compiler, where we assembled the right generative AI roles and successfully managed the data aspect to create innovative AI-driven solutions for our clients.

In this article, we’ll share our expertise on how to prepare your data for an AI project so it drives accurate insights and meaningful outcomes.

Practical tips for efficient AI data preparation

Every decision an AI-driven product makes, every prediction it offers, stems from the patterns and insights it uncovers within the vast sea of data it has been exposed to. But how do you prepare your data so that your business gets the most out of it? Here are the crucial steps to make sure your data is accurate, reliable, and fits your project goals.


Define clear goals

Before diving into the data preparation process, identify your project goals. Define the specific business challenges or opportunities you intend to address. With clear goals outlined, you’ll be able to come up with a roadmap for effective implementation.

And don’t focus on collecting tons of data. What really matters for your AI project is the data quality, not quantity.

Curate diverse data

Gather a diverse set of raw data relevant to your business objectives. Ensure that your dataset covers a variety of scenarios and contexts. This way, the generative AI model can learn from diverse situations and generate realistic outputs.

For example, an e-commerce platform needs diverse data, including customer purchase history, browsing behavior, and demographic information. With such a varied dataset, their machine learning model can understand different customer preferences and provide personalized and contextually relevant product recommendations.

Prioritize data quality

Once again, for AI data preparation, quality triumphs over quantity. Use tools for data profiling, cleansing, validation, and monitoring to refine your data. For example, a provider of healthcare-oriented software may employ data profiling and cleansing tools to make sure that patient records are accurate and error-free. This commitment to data quality not only enhances the reliability of the AI model’s diagnostic insights but also aligns with the broader generative AI value chain, where data integrity plays a foundational role.
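To make data profiling more concrete, here is a minimal sketch in plain Python. It assumes your records are already loaded as a list of dictionaries; the `profile` function and the sample patient fields are hypothetical and only illustrate the idea of counting missing and distinct values per column before any cleansing starts.

```python
from collections import Counter


def profile(records, columns):
    """Return per-column counts of missing and distinct values."""
    report = {}
    for col in columns:
        values = [r.get(col) for r in records]
        # Treat None and empty strings as missing.
        present = [v for v in values if v not in (None, "")]
        report[col] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "most_common": Counter(present).most_common(1),
        }
    return report


# Hypothetical patient records, for illustration only.
patients = [
    {"id": 1, "age": 34, "blood_type": "A+"},
    {"id": 2, "age": None, "blood_type": "a+"},
    {"id": 3, "age": 51, "blood_type": ""},
]
report = profile(patients, ["age", "blood_type"])
print(report)
```

A report like this quickly shows where values are missing and where the same value is spelled inconsistently ("A+" and "a+" count as two distinct values here), which tells you what the cleansing step has to fix.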

Integrate data sources

Integrate the raw data gathered from various sources and formats to create a unified view. It improves accessibility, enriches data attributes, and minimizes silos and inconsistencies. Techniques like data extraction, transformation, loading, and synchronization aid in seamlessly integrating diverse datasets.

For instance, an enterprise resource planning (ERP) software provider can integrate data from various departments so that their generative AI model can access and analyze a comprehensive dataset, enriching data attributes and reducing inconsistencies across financial, inventory, and customer management systems.
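As a rough illustration of building a unified view, the sketch below merges records from two departments on a shared key. The `integrate` helper, the `customer_id` key, and the sample data are all assumptions made for this example; a real integration would usually run through an ETL tool or a data warehouse.

```python
def integrate(*sources, key="customer_id"):
    """Merge records from several sources into one record per key.

    If two sources define the same field, the later source wins.
    """
    unified = {}
    for source in sources:
        for record in source:
            unified.setdefault(record[key], {}).update(record)
    return list(unified.values())


# Hypothetical extracts from two departments.
finance = [
    {"customer_id": 1, "balance": 120.0},
    {"customer_id": 2, "balance": 0.0},
]
crm = [
    {"customer_id": 1, "segment": "retail"},
    {"customer_id": 3, "segment": "wholesale"},
]
unified = integrate(finance, crm)
print(unified)
```

Customers that appear in only one source stay in the result with the fields that are known, so you can see the gaps instead of silently losing records.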

Automate data pipelines

When raw data is manually gathered from different sources, it can lead to delays, inconsistencies, and human mistakes. For example, data experts might miss important data points or input incorrect information, causing gaps in the dataset. To mitigate these risks and speed up the process, you should automate data preparation and the data pipeline too.

With automation in place, the system identifies, collects, and stores relevant data without human intervention. The entire process becomes more efficient and scalable while your AI models always work with accurate data.
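One simple way to picture an automated pipeline is as an ordered list of steps, each taking the output of the previous one. The sketch below assumes hypothetical step functions (`drop_incomplete`, `normalize_email`) and sample records; in practice you would usually trigger such a pipeline from a scheduler or an orchestration tool rather than by hand.

```python
from functools import reduce


def drop_incomplete(records):
    """Remove records that contain any None value."""
    return [r for r in records if all(v is not None for v in r.values())]


def normalize_email(records):
    """Trim whitespace and lowercase the email field."""
    return [{**r, "email": r["email"].strip().lower()} for r in records]


def run_pipeline(records, steps):
    """Apply each step to the output of the previous one."""
    return reduce(lambda data, step: step(data), steps, records)


# Hypothetical raw input.
raw = [
    {"email": " Ann@Example.com ", "plan": "pro"},
    {"email": "bob@example.com", "plan": None},
]
prepared = run_pipeline(raw, [drop_incomplete, normalize_email])
print(prepared)
```

Because every step is a plain function, you can add, remove, or reorder steps without touching the rest of the pipeline.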

Label your data

Labeling data means adding annotations or tags to make it understandable for machine learning algorithms. This step is crucial for training and evaluating AI models, refining data features, and optimizing performance. To label data, data engineers and data scientists use such techniques as data annotation, classification, segmentation, and verification.

Imagine you’re building an AI-based marketing automation tool. You need to label customer data with relevant tags, such as purchasing behavior, preferred channels, and response to promotions. The labeled dataset helps the generative AI model create targeted and effective marketing campaigns while you get promotional content that speaks to the right people. This is just one example of how generative AI use cases are transforming industries - from marketing and customer service to healthcare and design.

Secure your data

Establish stringent data security measures to safeguard sensitive information. It might cover encryption, access controls, anonymization, consent management, regular audits, and other security protocols to protect your data from unauthorized access.

Let’s say you’re dealing with customer health data for an AI-driven wellness app. When preparing data, you’d encrypt all personal information, set up strict access controls, and anonymize any sensitive data before using it for model training. Apart from protecting users’ private data, this helps you stay compliant with privacy regulations and build customer trust.
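Here is a minimal sketch of one piece of that work: dropping direct identifiers and replacing the user ID with a keyed hash before records reach the training set. The field names, the `prepare_for_training` helper, and the key are hypothetical. Note that this is pseudonymization rather than full anonymization, so it complements encryption and access controls and does not replace them.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_training(record, secret_key, drop_fields=("name", "email")):
    """Drop direct identifiers and pseudonymize the user ID."""
    cleaned = {k: v for k, v in record.items() if k not in drop_fields}
    cleaned["user_id"] = pseudonymize(str(record["user_id"]), secret_key)
    return cleaned


# Assumption: in a real system the key comes from a secrets manager.
key = b"replace-with-a-key-from-a-secrets-manager"
record = {"user_id": 42, "name": "Ann", "email": "ann@example.com", "resting_hr": 61}
safe = prepare_for_training(record, key)
print(safe)
```

The same user always maps to the same pseudonym, so you can still group records per user during training without exposing who that user is.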

Establish data governance

Formulate and implement policies, processes, roles, and metrics to manage data throughout its lifecycle. Data governance aligns data quality, integration, labeling, augmentation, and privacy with AI objectives, standards, and best practices. Use practices and tools such as a data strategy, data stewardship, data catalogs, lineage tracking, and AI development platforms to establish effective data governance processes.

For example, for an AI-driven product in the insurance field, establishing data governance policies is crucial for managing customer data throughout its lifecycle. This governance framework aligns with the organization’s AI objectives, ensuring that data quality, integration, labeling, and privacy practices adhere to industry standards and best practices.

Regularly update your dataset

The business landscape is dynamic, and your dataset should be too. Regularly update your data to incorporate new information and adapt to evolving business needs and trends. This helps your generative AI model remain relevant and effective over time.

For a finance software provider, for instance, systematically updating market data for their AI-driven investment tools will let them have generative AI models that provide relevant and effective insights for investors.

Handle missing data

Implement strategies to address missing data, such as calculating replacement values based on statistical methods or deleting records with missing values. It will help you improve data consistency and enhance the performance of your AI models.

A telecommunications company, for example, can address missing data in customer profiles by calculating replacement values based on statistical methods. This approach can help the company ensure that customer data for predictive analytics remains comprehensive and reliable.
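Both strategies can be sketched in a few lines. The example below assumes customer profiles stored as dictionaries with a hypothetical `monthly_gb` field, and uses the median as the replacement value; the mean or a model-based estimate are equally valid choices depending on your data.

```python
from statistics import median


def impute_median(records, field):
    """Fill missing values of `field` with the median of the known values."""
    known = [r[field] for r in records if r.get(field) is not None]
    fill = median(known)
    return [
        {**r, field: fill if r.get(field) is None else r[field]}
        for r in records
    ]


def drop_missing(records, field):
    """Alternative strategy: delete records where `field` is missing."""
    return [r for r in records if r.get(field) is not None]


# Hypothetical customer profiles.
profiles = [
    {"customer": "a", "monthly_gb": 4.0},
    {"customer": "b", "monthly_gb": None},
    {"customer": "c", "monthly_gb": 10.0},
    {"customer": "d", "monthly_gb": 6.0},
]
imputed = impute_median(profiles, "monthly_gb")
print(imputed[1])
```

Imputation keeps every customer in the dataset, while deletion keeps only fully observed records. Which one fits depends on how much data is missing and whether the gaps are random.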

Following these principles will streamline your AI data preparation process, but they are only general tips for making raw data AI-ready. Now, we will take a closer look at the specific steps needed to prepare data properly and build a solid data foundation. In other words, let’s break down exactly what you need to do with your data, step by step.

Data preparation for AI or what to do with your data

We all know there are dos and don’ts when prepping data for AI. But where do you begin? Without a clear, step-by-step approach, it can be confusing. Let’s go through the process of data preparation so you know what to do, from start to finish.

Step 1. Data collection

First things first, you need to collect a variety of raw data relevant to your case. The goal here is to give your AI model enough information to learn from. Not just any data, but data that is representative and unbiased. In plain language, this data should cover all the relevant scenarios, variations, and groups that the AI model will encounter in the real world, and it should not unfairly favor or disadvantage any particular group, outcome, or pattern.

Step 2. Data cleaning

Next, you’ve got to clean up your data. Fix missing values, get rid of weird outliers, and resolve any inconsistencies. Once done, your data will be much more reliable and ready for analysis. Dirty data can lead to poor model performance, unreliable results, and more work in the long run.
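As an illustration, the sketch below shows two typical cleaning operations: removing outliers with the interquartile range (IQR) rule and resolving inconsistent spellings of the same category. The 1.5 multiplier is the conventional default, and the `CITY_ALIASES` mapping is a hypothetical example; both should be adapted to your own data.

```python
from statistics import quantiles


def remove_outliers(values, k=1.5):
    """Keep values within k * IQR of the first and third quartiles."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if low <= v <= high]


# Hypothetical alias table for inconsistent city names.
CITY_ALIASES = {"ny": "new york", "nyc": "new york"}


def normalize_city(raw):
    """Trim, lowercase, and map known aliases to one canonical name."""
    cleaned = raw.strip().lower()
    return CITY_ALIASES.get(cleaned, cleaned)


order_values = [10, 12, 11, 13, 12, 11, 300]
print(remove_outliers(order_values))
print(normalize_city(" NY "))
```

Before dropping an outlier, it is worth checking whether it is a data entry error or a genuine rare event, because the second kind can be exactly what the model needs to learn.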

Step 3. Data transformation

Here, you’ll need to standardize and encode your data so that AI algorithms can make sense of it and recognize patterns. Standardization means converting data into a consistent format, such as scaling numerical values to a similar range, for example rescaling all values to fall between 0 and 1. Encoding refers to turning categorical data, such as colors or labels, into numerical values that the AI can process. It’s like translating your data into a language the AI understands.
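Both ideas fit in a short sketch. The scaling below is min-max scaling, which maps the smallest value to 0 and the largest to 1, and the encoding is one-hot encoding, which gives each category its own 0/1 column. The function names and sample values are ours, chosen for illustration.

```python
def min_max_scale(values):
    """Rescale numbers linearly so the minimum is 0 and the maximum is 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical, so there is no range to scale.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(values):
    """Turn categories into 0/1 vectors, one position per category."""
    categories = sorted(set(values))
    encoded = [[1 if v == c else 0 for c in categories] for v in values]
    return encoded, categories


scaled = min_max_scale([10, 20, 30])
encoded, categories = one_hot(["red", "blue", "red"])
print(scaled)
print(categories, encoded)
```

One-hot encoding avoids implying an order between categories, which would happen if you simply numbered the colors 1, 2, 3.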

Step 4. Data reduction

At this step, you should remove any duplicates and balance the data to speed up processing and prevent bias. This keeps the AI from being swayed by repeated or unbalanced information. For example, if you have far more data from one group than another, the AI could learn to make predictions based on the dominant group, ignoring smaller but equally important groups.
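The sketch below shows one possible way to do both: exact-duplicate removal followed by random downsampling of the larger groups. The `label` field and the sample rows are hypothetical. Downsampling throws data away, so oversampling the smaller group or weighting classes during training are common alternatives.

```python
import random
from collections import defaultdict


def deduplicate(records):
    """Drop exact duplicate records, keeping the first occurrence."""
    seen, unique = set(), []
    for r in records:
        fingerprint = tuple(sorted(r.items()))
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(r)
    return unique


def downsample(records, label_field, seed=0):
    """Randomly shrink every group to the size of the smallest group."""
    groups = defaultdict(list)
    for r in records:
        groups[r[label_field]].append(r)
    target = min(len(g) for g in groups.values())
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    balanced = []
    for g in groups.values():
        balanced.extend(rng.sample(g, target))
    return balanced


# Hypothetical labeled rows with one exact duplicate.
rows = [
    {"text": "a", "label": "spam"},
    {"text": "a", "label": "spam"},
    {"text": "b", "label": "ham"},
    {"text": "c", "label": "ham"},
    {"text": "d", "label": "ham"},
]
unique = deduplicate(rows)
balanced = downsample(unique, "label")
print(len(unique), len(balanced))
```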

Step 5. Data validation

Data validation is the final check before you start training the AI model. Here, the task is to verify that your data meets certain quality standards, like whether it’s consistent, free from errors, and follows the right format.

You’ll also have to verify that all necessary features are included and that no data is missing or corrupted. Otherwise, you will train your model on faulty data, which can lead to inaccurate predictions or even failure to perform well in real-world situations. But that’s not the only risk you will face when your data sets are not ready for AI. Below, we will discover some other, more harmful ones.
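A final check like this can be as simple as comparing every record against a small schema. In the sketch below, the schema format (an expected type plus a rule per field) and the sample rows are assumptions made for illustration; dedicated validation libraries offer the same idea with more features.

```python
def validate(records, schema):
    """Return a list of (row_index, field, problem) tuples."""
    problems = []
    for i, r in enumerate(records):
        for field, (expected_type, check) in schema.items():
            if field not in r or r[field] is None:
                problems.append((i, field, "missing"))
            elif not isinstance(r[field], expected_type):
                problems.append((i, field, "wrong type"))
            elif not check(r[field]):
                problems.append((i, field, "out of range"))
    return problems


# Hypothetical schema: expected type and a rule for each required field.
schema = {
    "age": (int, lambda v: 0 <= v <= 120),
    "email": (str, lambda v: "@" in v),
}
rows = [
    {"age": 34, "email": "ann@example.com"},
    {"age": 150, "email": "bob@example.com"},
    {"age": "41", "email": None},
]
problems = validate(rows, schema)
print(problems)
```

An empty list means the dataset passed every check; anything else gives you the exact row and field to fix before training starts.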

Poor data preparation, poor results

Missing even one of the data preparation steps could lead to serious problems you’ll want to avoid. Here’s what can happen if you don’t have robust data preparation processes in place.

| The cost of weak data preparation | | |
| --- | --- | --- |
| 💸 Costly mistakes and delays | ❌ Inaccurate recommendations | 🤷‍♂️ Irrelevant guidance |
| 🔒 Security risks | 🚨 Operational inefficiencies | 🗑️ Wasted resources |

Costly mistakes and delays

Without a solid data foundation, AI projects might end up with financial expenses you didn’t plan and unnecessary delays. Inaccurate data leads to poor model performance and you will need to invest extra time and resources to fix errors, adjust the model, and rerun tests. No surprise it’ll increase costs and push back project timelines.

For example, think of a financial institution implementing an AI-driven fraud detection system that relies on inaccurate historical transaction data. When the model fails to accurately identify fraudulent activities, it causes delayed responses and significant financial losses. Or let’s take a look at a real-world example.

Amazon developed an AI tool to automate CV screening, but it was trained on past hiring data that favored men. The system started penalizing resumes that contained the word “women’s” and ranked female candidates lower. As a result, Amazon scrapped the project after wasting years of development and untold amounts of money.

Inaccurate recommendations

Poor-quality data foundation for AI can be the reason why the model generates wrong recommendations. Whether it’s suggesting products to users or making decisions based on faulty data, the outcomes may fall short of expectations and user needs.

Imagine an e-commerce platform that uses flawed customer preference data. Instead of recommending products people want, the system suggests random, irrelevant items. Frustrated customers lose interest, and their experience suffers. As a result, sales drop, and the platform starts losing customer trust.

Irrelevant guidance

When data is outdated, the guidance provided by AI systems may become pointless in the current context. This can impact the applicability and effectiveness of AI-driven solutions.

For instance, let’s say a supply chain management company employs an AI system for inventory forecasting. If the data used for forecasting is outdated, the system may recommend stock levels based on older trends, leading to overstocking or stockouts and disrupting operational efficiency.

Security risks

Inadequate data preparation might introduce security risks. Flawed data causes vulnerabilities in AI systems, compromises sensitive information, and exposes organizations to security threats.

For instance, if an autonomous vehicle manufacturer incorporates AI algorithms trained on compromised sensor data, the car could misinterpret its surroundings, make bad driving decisions, and put people at risk. Something similar happened at Boeing, in automated flight-control software rather than an AI model.

Boeing’s Maneuvering Characteristics Augmentation System (MCAS) was designed to automatically push the plane’s nose down in certain flight conditions, but it relied on a single angle-of-attack sensor. When that sensor fed bad data to the system, it mistakenly forced the plane into a nosedive. This flaw led to two tragic crashes, Lion Air Flight 610 in 2018 and Ethiopian Airlines Flight 302 in 2019, killing 346 people. The fallout was massive: Boeing faced over $20 billion in lawsuits, settlements, and fines, and the 737 MAX was grounded worldwide for nearly two years.

Operational inefficiencies

One of the most obvious consequences is probably operational inefficiencies. Low-quality data brings inaccurate insights and inefficient workflows. Tasks take longer, errors pile up, and teams spend more time fixing problems instead of focusing on innovation and growth.

For example, a manufacturing company implements AI-driven predictive maintenance based on incomplete equipment sensor data. The AI system fails to predict maintenance needs precisely, resulting in unplanned downtime, increased maintenance costs, and disruptions to production schedules.

Wasted resources

Lastly, investing time and resources in training AI models with inconsistent data can be counterproductive. The results might be wasted efforts and resources, with the need for reiterations and corrections to address the shortcomings in the data.

Let’s suppose a technology firm invests resources in developing an AI chatbot for customer support. If the training data is of low quality, the chatbot may provide incorrect information. Customers won’t be happy with that, and the company will have to pour in even more resources to fix and improve it.

If you’ve made it this far, you won’t debate that AI projects can only succeed with well-prepared data. If it’s prepared poorly, the whole project can fall apart. But that doesn’t mean you should avoid AI altogether. Partner with a reliable AI development company like Flyaps, and you’ll have nothing to worry about.

How Flyaps can help you form a solid data foundation for AI

At Flyaps, we have over 10 years of experience bringing business ideas to life with our tech expertise. Here’s our approach to preparing a data foundation for AI implementation.

- We begin by conducting a comprehensive analysis of your data needs, identifying potential challenges, and formulating a tailored strategy. With a keen eye on data quality, we employ advanced techniques for profiling, cleansing, and validation, ensuring that your dataset is accurate and reliable.

- Our team incorporates DevOps practices to enhance the efficiency of the development process. Continuous integration, deployment, and automation ensure a seamless workflow, allowing for quick adjustments based on evolving data requirements. This agility is crucial for adapting to the dynamic nature of AI projects.

- The inclusion of our data engineering specialists further strengthens the data foundation. Our experts design and implement robust data architectures, integrating diverse sources and optimizing data pipelines. Their focus on security and scalability ensures that the data infrastructure can support the unique demands of AI algorithms.

Want to create a well-organized, high-quality data foundation? Our team of skilled DevOps and data engineering specialists will build a strong data foundation specifically designed for your AI needs. Contact us to kickstart the process!

AI can do more for your business—let’s find the best way to make it work for you. Check out our expertise and let’s discuss your next AI project.
