LLM vs Generative AI: Rivals, Partners, or Something in Between?

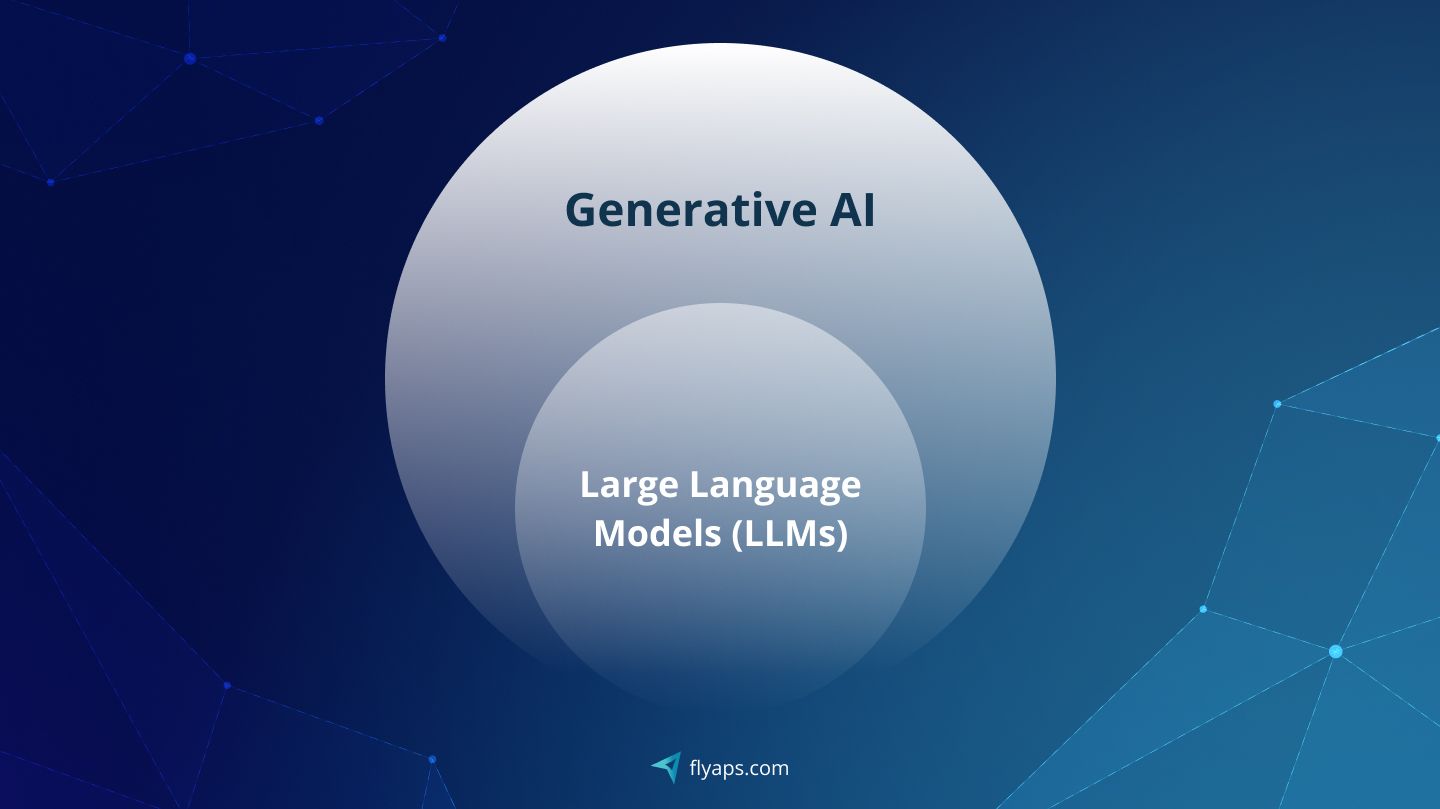

Let’s get the basics straight. Generative AI (GenAI) is the broad technology that creates new content based on what it has learned from existing data. Large Language Models (LLMs), on the other hand, are one branch of the GenAI family tree, built to understand and generate human language.

That’s why comparing generative AI and large language models is a bit like comparing cars to vehicles: one is part of the other. But we won’t get stuck on that technicality, because the discussion is about something different. It’s about relevance. Do you need the full creative spectrum of generative AI, or the sharp text-focused capabilities of an LLM?

Eleven years in, we at Flyaps have implemented both LLMs and generative AI for clients in industries including recruitment, logistics, and the public sector. In our experience, many business owners mistake GenAI for LLMs or mix the two up. So we will explain the real difference between LLMs and generative AI in more detail and provide some relevant examples, so you don’t waste time and resources on the wrong tech.

TL;DR

→ Generative AI is a broad technology that creates new content, like text, images, audio, video, or code, based on existing data.

→ LLMs are a subset of GenAI focused on understanding and generating human language.

→ The key difference between generative AI and LLM: GenAI handles multiple data types; LLMs are text-centric.

→ Both LLMs and GenAI are used across industries like healthcare, finance, law, telecom, and more.

→ Choosing between GenAI and LLM affects your workflows, infrastructure, budget, and ROI.

Are LLMs generative AI? Let’s clear it up once and for all

Yes, LLMs are generative AI. But no, generative AI isn’t limited to LLMs. To keep it simple, we’ll review large language models vs generative AI, and start with the latter, as it is a broader category.

Generative AI, explained… Again

The Internet is flooded with LLM vs Gen AI explainers, yet acronyms like AI, GenAI, and LLM often confuse more than they clarify. That’s why it makes sense to break down these concepts one by one, so you actually get the ideas right.

Generative AI, or Gen AI, is AI that creates new content instead of just analyzing it. It can spit out text, images, music, videos, and code. Generative AI tools can be built on LLMs and trained on large datasets to better understand human input and provide more accurate results. But this isn’t always the case.

Here are a few popular examples of generative AI to see how it works.

DALL·E

DALL·E is a popular generative AI platform that creates images from user prompts. You describe the image you want, and DALL·E generates it. The technology relies on large language models, which help it interpret user input. But that’s a topic we’ll explore later.

Now, let’s focus on DALL·E’s pros and cons. It can transform a simple prompt into a striking image in seconds, but vague or unclear prompts may lead to off-target results. It can also reflect biases in its training data and requires significant computing power.

| DALL·E: Quick pros & cons | |
| --- | --- |
| 🖼️ Text-to-image creation | ⚠️ Bias & nuance issues |
| 🎨 High creativity & variety | ⚠️ Resource-intensive |
| ✨ Customizable styles | |

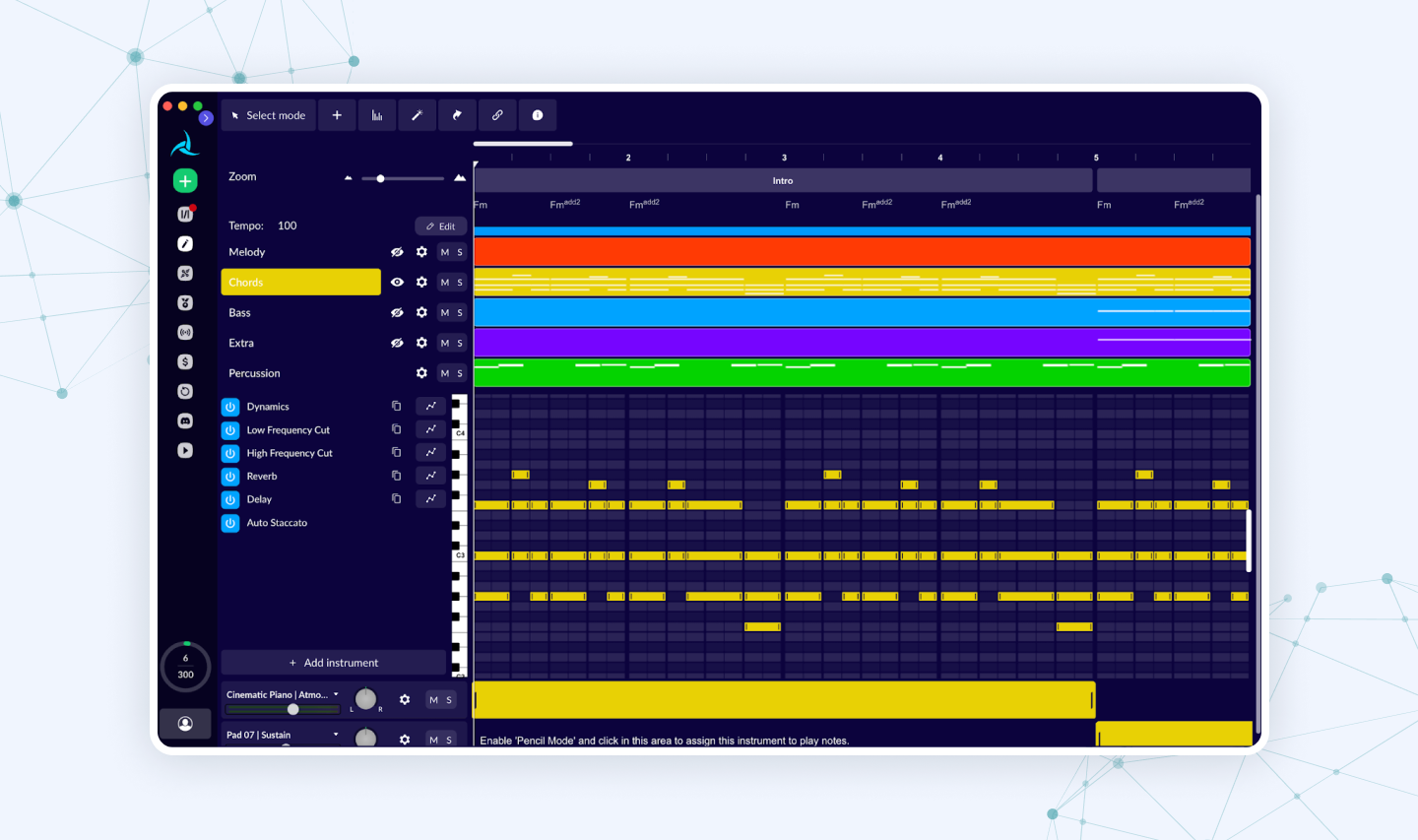

AIVA

AIVA is a generative AI tool for creating music. It analyzes patterns and structures in existing music to compose new pieces and write lyrics without human involvement.

When you need music quickly, AIVA is your go-to. You can generate tracks in minutes and adjust style, tempo, and instruments to fit your project. But keep in mind that AI-generated music may lack the emotional depth of human compositions and may sometimes resemble existing works. Additionally, if you want to own the copyright for your tracks, the free plan is not enough.

| AIVA: Quick pros & cons | |
| --- | --- |
| ⚡ Fast music production | 😐 Lacks emotional depth |
| 🎛️ Ultimate customizability | 🔁 Might sound similar to prior works |
| 🎼 Wide range of music styles | ⚠️ Licensing limitations |

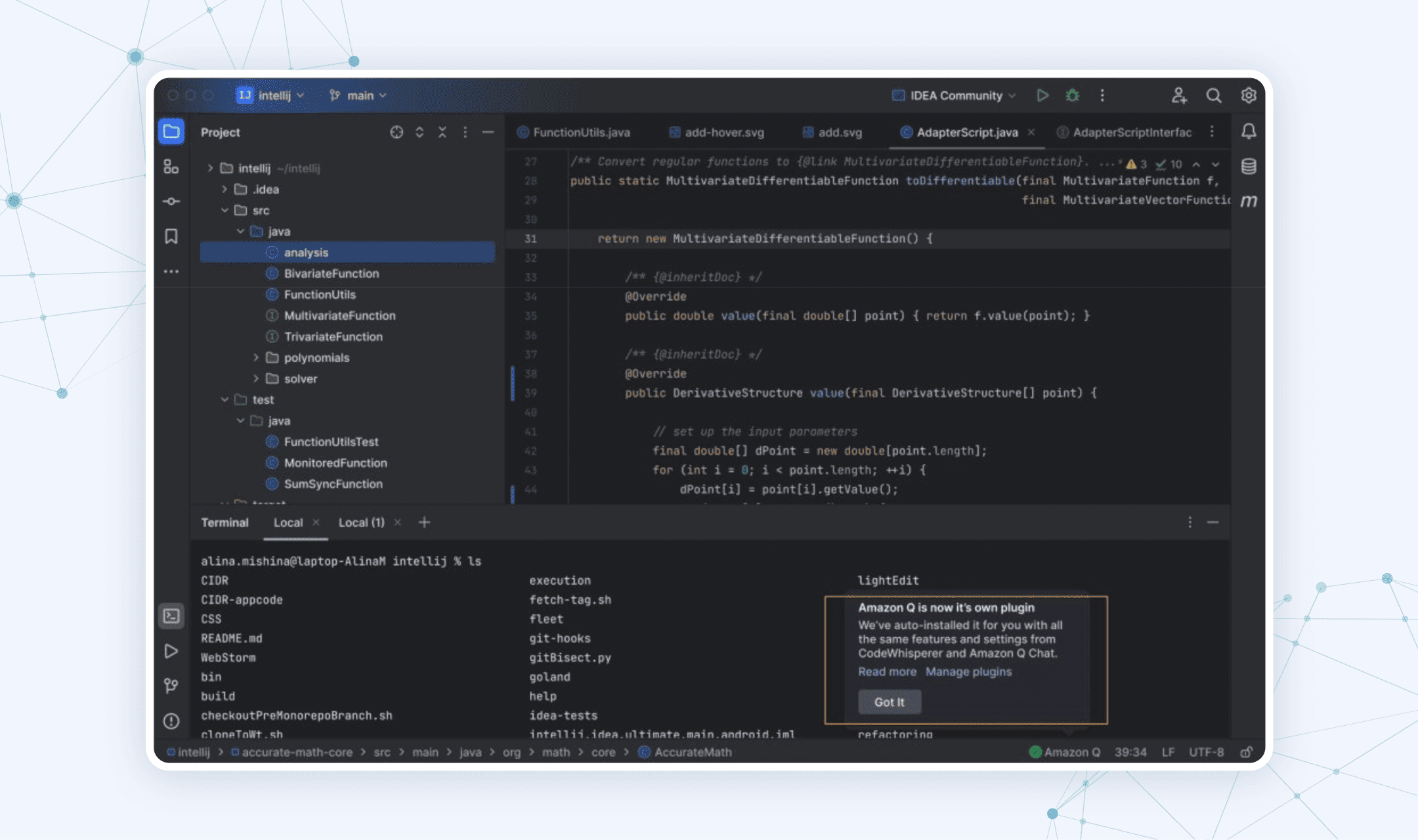

Amazon Q Developer

Q Developer is a generative AI assistant that supports developers throughout the software development lifecycle. It reads your code, understands your comments, and can whip up code snippets, functions, unit tests, or even documentation. All based on what you type or ask it to do.

With Q Developer, you can type what you want in plain English, and it’ll generate code snippets, functions, or even full modules for you. For example, you could ask it to “build an SMS system for delivery alerts,” and it’ll give you a working plan and code. It works right inside popular IDEs like VS Code, JetBrains IDEs, and Visual Studio, or even from the command line. But it’s built for AWS, so it works best if you’re in that ecosystem.

| Q Developer: Quick pros & cons | |
| --- | --- |
| 📝 Natural Language code generation | 🔗 Dependency on AWS ecosystem |
| ✅ Automated code reviews | |
| 🛠️ Seamless integration | |

Of course, this list can go on. But instead of reviewing yet another generative AI model from the crowd, we’ll analyze one of our cases. We have plenty of customizable AI-based solutions with advanced machine learning models and algorithms in our portfolio.

Let’s get started and discuss your next Gen AI project right now.

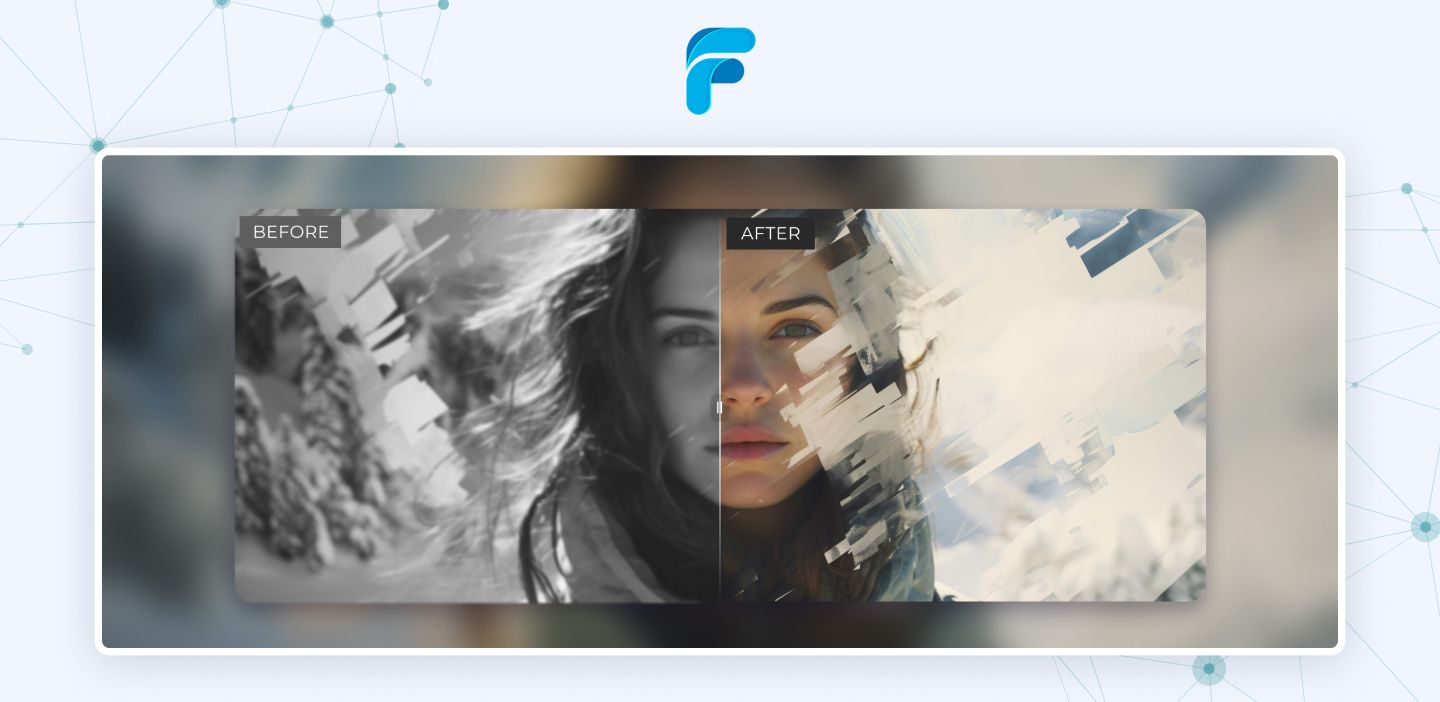

Case in point: transforming images with gen AI filters

The Flyaps team built a solution that boosts users’ creativity with AI-powered filters. First, we collected and curated a large image dataset from multiple sources. Using this data, we designed a generative AI model that detects hidden patterns and connections within the dataset. Then, we trained the model to emulate artistic techniques, like pencil drawing, so it can produce distinct, personalized effects.

With the model in place, we wrapped it in practical tools: it can restyle an image while keeping its original structure, adjust lighting, color, and shape, and upscale resolution without losing detail. It also handles restoration tasks, like filling gaps, rebuilding backgrounds and missing pixels, and repairing damaged or torn photos, so results look clean and natural.

Now that generative AI is more or less clear, let’s shift the focus to LLMs.

LLMs: AI that talks (and writes) back

Large language models, or LLMs, are a subset of generative AI focused specifically on analyzing and generating text-based data. These models are trained on massive text datasets and contain billions of parameters (that’s where the “large” comes from). They rely on deep learning, specifically a neural network architecture called the transformer. Transformers allow LLMs to capture patterns in the texts they’re trained on and generate new ones, mimicking the tone and style.
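To make “learn patterns from text, then generate new text” concrete, here is a toy sketch. It is deliberately not a transformer: it swaps in a simple bigram (word-pair) model, and the corpus, function names, and seed are all assumptions chosen for illustration. Real LLMs run a similar predict-the-next-token loop, but with a neural network and vastly more data.

```python
import random
from collections import defaultdict


def train_bigrams(text):
    """Record, for every word, the words that followed it in the training text."""
    words = text.lower().split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers, start, max_words=8, seed=0):
    """Build text one word at a time by sampling a follower of the last word."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    output = [start]
    while len(output) < max_words:
        options = followers.get(output[-1])
        if not options:  # the last word never had a follower in training
            break
        output.append(rng.choice(options))
    return " ".join(output)


# Hypothetical miniature "training set"
corpus = "the model reads text and the model writes text and the model learns patterns"
model = train_bigrams(corpus)
print(generate(model, "the"))
```

Every word pair in the output was seen during training, yet the full sentence may be new. That is the core idea an LLM scales up.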

Though LLM is a narrower concept than generative AI, its range of applications is pretty extensive, from DNA research and sentiment analysis to online search and AI chatbots/AI agents. But we will talk more about this a bit later. Now, let’s look at some of the most interesting examples of large language models.

GPT

OpenAI is dropping new models so fast that it’s difficult to keep up. Right now, the frontier includes GPT-4.1, GPT-5, GPT-5 mini, and GPT-5 nano. The mini and nano versions are lighter, faster variants of GPT-5, so we’ll skip them for now. Let’s compare GPT-4.1 and GPT-5.

| GPT-4.1 vs GPT-5 | GPT-4.1 | GPT-5 |
| --- | --- | --- |
| Quick description | Reliable, multimodal workhorse. Stronger reasoning than GPT-4o, with cheaper output tokens than GPT-5. | The flagship model: best reasoning, consistency, and multimodal capabilities. Handles very large contexts. |
| Best fit for | Enterprises and devs who need stable, affordable AI for analysis, reports, coding, and general productivity. | Mission-critical tasks, like research, advanced coding, creative production, and high-stakes enterprise work. |
| Price per 1M tokens | Input $2.00, output $8.00 | Input $1.25, output $10.00 |
| Release date | April 2025 | August 2025 |
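Per-token prices only mean something once you apply them to a workload. The sketch below uses the prices from the table above. The monthly token volumes are a hypothetical assumption, so plug in your own numbers.

```python
# Prices in USD per 1M tokens, copied from the comparison table
PRICES = {
    "GPT-4.1": {"input": 2.00, "output": 8.00},
    "GPT-5": {"input": 1.25, "output": 10.00},
}


def monthly_cost(model, input_tokens, output_tokens):
    """Return the API cost in USD for the given token volumes."""
    price = PRICES[model]
    input_cost = input_tokens / 1_000_000 * price["input"]
    output_cost = output_tokens / 1_000_000 * price["output"]
    return input_cost + output_cost


# Hypothetical workload: 10M input tokens and 2M output tokens per month
INPUT_TOKENS = 10_000_000
OUTPUT_TOKENS = 2_000_000

for name in PRICES:
    print(f"{name}: ${monthly_cost(name, INPUT_TOKENS, OUTPUT_TOKENS):.2f}")
# GPT-4.1: $36.00
# GPT-5: $32.50
```

With this input-heavy mix, GPT-5 comes out cheaper. An output-heavy workload flips the result, because GPT-4.1’s output tokens cost less.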

GPT models are amazing at generating human-like text, automating tasks, and aiding creativity, but they’re not flawless. Mistakes, biases, and gaps in understanding still happen, so humans need to keep an eye on them.

| GPT models: Quick pros & cons | |
| --- | --- |
| 🗣️ Natural Language understanding & generation | ⚠️ Accuracy issues |
| 🌐 Knowledge across domains | ⚠️ Absence of true reasoning |
| 👥 Multi-user accessibility | |

Claude

Claude is a family of large language models developed by Anthropic. It can write, analyze, translate, code, reason, and even understand images. Like GPT, it has several iterations, and the latest are Claude Opus 4.1 and Claude Sonnet 4.

In brief, if your tasks demand intensive computation and extended reasoning capabilities, Claude Opus 4.1 is the optimal choice. For more straightforward applications requiring quick responses, Sonnet 4 would be more suitable.

Claude models prioritize ethics and safety, steering clear of harmful or biased content. Thanks to extended context windows, they can handle long conversations and documents without losing track. Just note: they may stumble on niche or highly specialized topics, offering less depth in those areas.

| Claude models: Quick pros & cons | |
| --- | --- |
| 🛡️ Safety-focused design | ⚠️ Limited knowledge of niche subjects |
| 📝 High-quality language generation | ⚠️ Usage limits |
| 🖇️ Long context window | |

Gemini

Gemini is an advanced AI model developed by Google DeepMind. It’s Google’s answer to GPT and Claude, a state-of-the-art LLM with multimodal capabilities. It can handle reasoning, coding, summarization, translation, and other complex tasks. Gemini is integrated into Google Search, Gmail, Docs, and Pixel devices, and is also available via apps and APIs for developers.

Like all large language models, Gemini can sometimes provide inaccurate or misleading responses. Without clear instructions, the outputs may be less effective or require multiple attempts to get the desired outcome.

| Gemini: Quick pros & cons | |
| --- | --- |
| 🌐 Integration with the Google ecosystem | ⚠️ Potential for hallucinations |
| ✨ Content generation & creativity | ⚠️ Dependence on prompts |
| 🔗 Multimodality | |

But the world of LLMs isn’t just about GPT, Claude, or Gemini. There’s also Grok, Llama, Qwen, you name it. And every company keeps pumping out new models, each one trying to outpace the others. Honestly, this race doesn’t seem to have an end in sight. But one thing is clear: these LLMs are the backbone of many generative AI tools. They help AI make sense of text prompts, which it can then turn into images, videos, or code.

Gen AI vs LLM: How can they differ when one is part of the other?

The difference between generative AI and large language models becomes obvious when you look at implementation. That’s why, when you choose GenAI vs LLM for your business, you should also think about what kind of data you’ve got, the infrastructure you need, the skills on your team, and the costs. In short, you should find the best tool for your job. As you weigh the pros and cons of generative AI vs LLMs, keep these aspects in mind:

| Aspect | GenAI | LLM |
| --- | --- | --- |
| Business use cases | Multi-modal applications: content creation (text, image, video), customer engagement, marketing, product design, and simulations. Broad use across departments. | Primarily text-centric applications: chatbots, document summarization, coding assistance, knowledge management, and analytics. Often integrated into existing workflows. |
| Operational differences | Handles multiple data types (text, images, audio, and video) simultaneously. Often requires orchestration across models and pipelines. | Focused on natural language understanding and generation. Operationally simpler in scope, but can be compute-intensive for large deployments. |
| Setup/infrastructure differences | May require more complex infrastructure: GPU clusters, model orchestration, multi-modal pipelines, APIs for different media types. | Infrastructure is generally optimized for text processing: CPU/GPU clusters, memory-heavy architectures, often deployable via APIs or containerized environments. |
| Data/training needs | Requires diverse datasets spanning multiple modalities (text, images, audio, video). Fine-tuning is often domain-specific. | Primarily large text corpora. Fine-tuning is usually text-focused, such as domain-specific documents or code repositories. |
| Development expertise | Needs multi-disciplinary teams: ML engineers, data scientists, domain experts for images/audio/video, prompt engineers, and integration specialists. | Requires NLP expertise, prompt engineering, data preprocessing skills, and understanding of LLM architectures and limitations. |
| Cost & ROI considerations | Higher setup and operational costs due to multi-modal support and infrastructure. ROI comes from wide-ranging automation, content creation, and innovation potential. | Lower operational costs compared to full GenAI, especially for text-focused apps. ROI comes from knowledge automation, coding productivity, and conversational AI. |

Technical stuff and costs matter when you assess LLMs vs generative AI, but what also counts is whether the solution fits your industry. There is no need to force GenAI into your workflow if all you really need is an LLM, or vice versa.

Here are some popular LLM and generative AI use cases in different domains, so you can get a better sense of how they work in practice.

Generative AI vs LLM across different industries

Generative AI use cases often steal the spotlight since they cover a wide range of tasks. But LLMs have plenty of practical applications, too. Here’s how you can use generative AI and LLMs for your business needs.

| LLM vs GenAI use cases | GenAI | LLM |
| --- | --- | --- |
| Healthcare | | |
| Finance | | |
| Law | | |
| Customer service | | |
| Content creation | | |
| Entertainment | | |
| Telecom | | |

Generative AI vs large language models? The question is still open

Large language model vs generative AI? If that’s the decision on your plate, know it’s not just a technical detail. It has a direct impact on your workflows, infrastructure, and ultimately the ROI of your AI initiatives.

Look through the checklists to find out where you should start with AI.

Choose LLM if:

✅ Your workflows revolve around text (documents, reports, emails, code).

✅ You need automation for knowledge management, summarization, or chatbots.

✅ Your budget is limited, and you want lower operational costs.

✅ You need quick deployment with fewer infrastructure demands.

✅ Your team has expertise in NLP and prompt engineering rather than multi-modal AI.

Choose generative AI models if:

✅ Your business involves multiple data types (text, images, audio, video).

✅ You want to generate rich content, such as visuals, simulations, or multimedia.

✅ Industry use cases demand more than text, like healthcare imaging, manufacturing prototypes, or marketing campaigns.

✅ You’re ready to invest in stronger infrastructure and bigger budgets.

✅ You have (or can build) a multi-disciplinary team with expertise beyond NLP.

Final thoughts on LLMs and generative AI

Generative AI can create diverse content, but it always needs clear instructions. With DALL·E, for instance, users describe the desired image. This is where large language models and generative AI work well together. LLMs interpret context and meaning across languages, enhancing user input.

To build a solution using generative AI or LLM, partner with an experienced software development team like Flyaps. If you’re wondering about the budget required, check out our guide on how much AI costs in 2025.

With expertise in generative AI models, we’ll help you choose the right approach and manage the technical details. Contact us, and we’ll handle the rest.

In 3 months, we’ll create a working PoC to test feasibility, minimize risks, and guide your next steps.

Still have questions on generative AI models?

Can LLMs handle languages other than English?

Yes. Many modern LLMs, including GPT, Claude, and Gemini, are trained on multilingual datasets, enabling them to perform language translation and other language-related tasks. However, their proficiency may vary depending on the language and the size of the training data for that language.

How do generative AI and LLMs deal with biased or inappropriate content?

Both generative AI and LLMs can inherit biases from their training data. Developers implement safeguards like content filters, ethical guidelines, and reinforcement learning from human feedback (RLHF) to minimize harmful outputs. Still, human oversight is crucial, especially in sensitive domains like healthcare, law, or finance.

Do I need a powerful GPU for using generative AI tools or LLMs?

It depends. Large-scale training or fine-tuning of generative AI systems and LLMs often requires GPU clusters. However, many businesses use cloud-based APIs from OpenAI, Anthropic, or Google, which offload computation. For inference and small-scale deployments, a single high-end GPU may be sufficient, and CPU-based systems can often handle small open-weight models, such as compact Llama or Qwen variants. Hosted models like GPT-5 mini or nano run on the provider’s hardware and are accessed through an API, so they need no local GPU at all.
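A rough way to judge whether a self-hosted model fits your hardware is to estimate the memory its weights need: parameter count times bytes per parameter. The sketch below assumes hypothetical model sizes and common precisions (16-bit, 8-bit, 4-bit). It ignores runtime overhead such as activations and the KV cache, so treat the results as lower bounds.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


# Hypothetical model sizes (in parameters) and weight precisions (in bits)
MODEL_SIZES = (1e9, 7e9, 70e9)
PRECISIONS = (16, 8, 4)

for params in MODEL_SIZES:
    for bits in PRECISIONS:
        gb = weight_memory_gb(params, bits)
        print(f"{params / 1e9:.0f}B params @ {bits}-bit: ~{gb:.1f} GB")
```

For instance, a 7-billion-parameter model needs about 14 GB for its weights at 16-bit precision but only about 3.5 GB at 4-bit, which is why quantized small models can run on ordinary machines.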

Can generative AI models create content without LLMs?

Yes. Not all generative artificial intelligence relies on LLMs. For example, image generation GANs or music-focused AI can generate content directly from non-textual datasets. LLMs mainly add value when a tool needs natural language processing: interpreting text prompts or tying multimodal outputs together.

How do I measure ROI when investing in LLMs or generative AI models?

ROI can be measured through productivity gains, cost savings, quality improvement, or revenue generation. For LLMs, metrics often include time saved on text generation, text data processing, automated customer support, or code generation tools. For generative AI systems, it could be faster product design, more engaging marketing materials, or reduced manual labor in simulations and creative workflows.
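As a starting point, the classic ROI formula is net gain divided by cost. The sketch below applies it to a hypothetical LLM support assistant. Every figure (hours saved, hourly rate, build cost, API fees) is an assumption for illustration, not a benchmark.

```python
def roi_percent(total_benefit, total_cost):
    """Classic ROI: net gain divided by cost, expressed as a percentage."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost * 100


# Hypothetical first-year figures
hours_saved = 1_200          # support hours the assistant takes over
hourly_rate = 40.0           # fully loaded cost of one support hour, USD
build_cost = 25_000.0        # one-off development cost, USD
monthly_api_fees = 500.0     # recurring usage cost, USD

benefit = hours_saved * hourly_rate
cost = build_cost + 12 * monthly_api_fees

print(f"Benefit: ${benefit:,.0f}, cost: ${cost:,.0f}, ROI: {roi_percent(benefit, cost):.1f}%")
```

The same function works for generative AI projects. Only the inputs change, for example designer hours saved or revenue from faster campaign launches.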