Prompt Engineering for Project Managers: How to Make an LLM Your Work Assistant

One thing that has probably become the new norm is a new LLM being released almost every single day. You go to LinkedIn or scroll through TechCrunch and then boom – you see another LLM announcement, one that is faster, smarter, and can make you even more productive. Just what you’re looking for, right?

As a PM working for a company that develops production-ready AI solutions, I learned to take such news with a grain of salt. I mean, of course GPT-5 is smarter than GPT-3.5, but there’s still no universal LLM that will dethrone the rest and become the number one choice for everyone. But I do believe that if you want to thrive in today’s competitive world, you’ve got to befriend AI and have it as your extra pair of hands.

So let me tell you how it can be done the right way if you’re a PM like myself, and why it’s not exclusively a programmer's shtick. But you might be wondering, why do PMs need LLMs in the first place? Let’s start from there.

TL;DR: Prompt engineering for PMs explained and how to master it

| LLMs won’t replace PMs – they’re power assistants if you prompt them well. |

| Use structured prompts (RTF/CREATE): define the role, task, format; add examples and constraints when needed. |

| Safely delegate: drafting BRDs/user stories/use cases, diffing docs/CSVs, generating Jira tickets, and simplifying tech explanations. |

| Guard privacy, verify outputs, and split big asks into smaller ones—don’t overload the model. |

| The PM role is evolving into “AI-assisted PM”: those who master these workflows gain a durable edge. |

Why Project Managers should team up with AI

A good PM is someone who always wants to get better and do their job faster without compromising quality. But sadly, PMs can’t escape dealing with routine tasks, which can take a lot of time and energy. That’s where the problem lies.

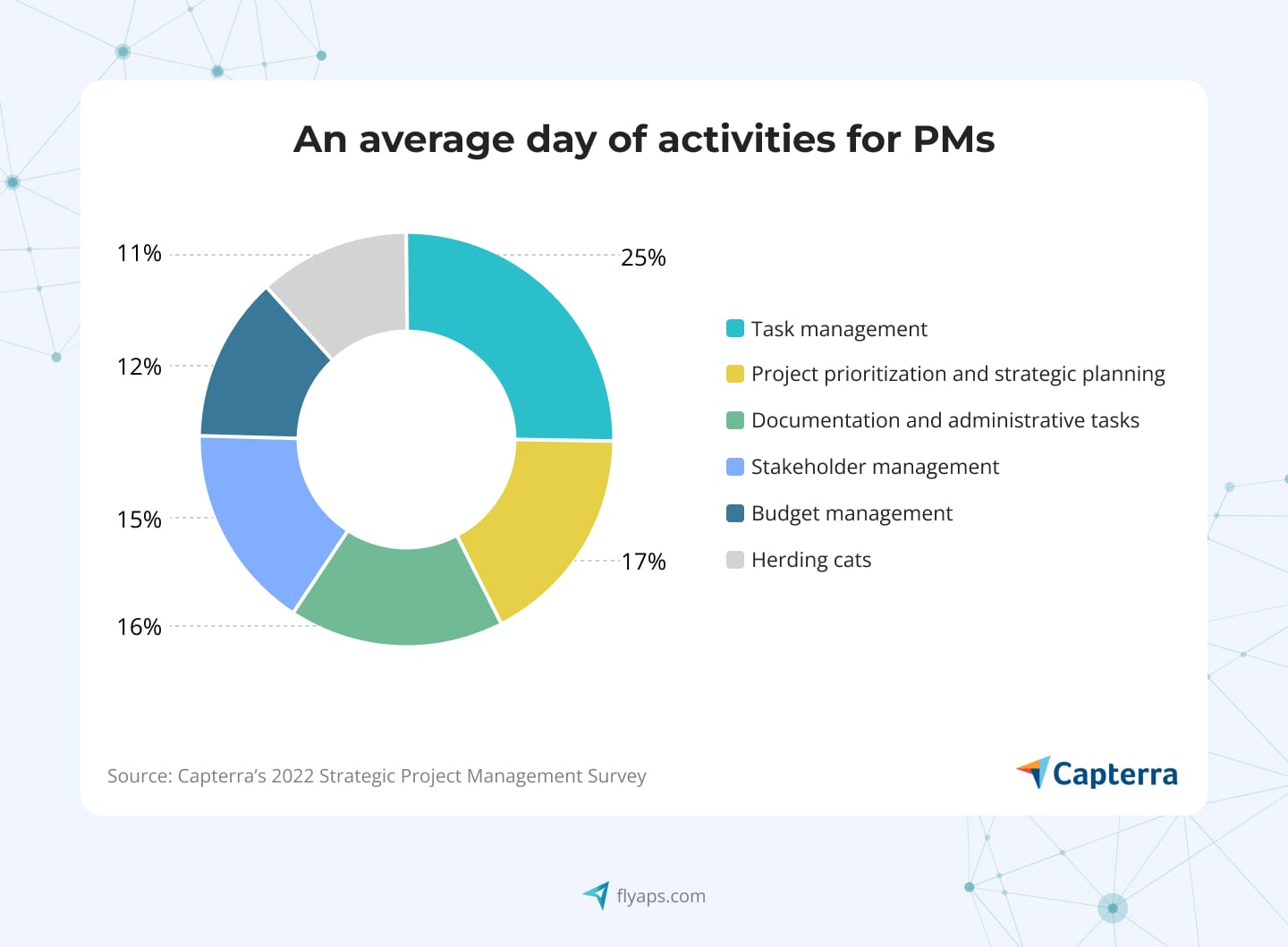

Modern PMs spend more than 40% of their time dealing with project or administrative tasks. And yes, sometimes your head can explode from those. So a logical question pops up: can you delegate some of those tasks to AI?

“Delegate” doesn’t imply that AI will do the job better than you. For example, if your parents ask you to help them do the dishes, it doesn’t mean they couldn’t do it without you; maybe they’re just tired or too busy (or maybe it’s your responsibility). The IT industry works in a similar fashion – experienced developers often delegate some tasks to the “young ones”, so that they can focus on more complex tasks.

That said, PMs can delegate some of their tasks to LLMs. But I already hear arguments like “AI gives wrong answers” or “LLMs hallucinate, they’re useless”. And they’re right, to some extent: a big part of what makes an LLM hallucinate is the quality of the prompt it receives. Programmers, on the other hand, have learned to overcome this challenge, so let’s see how they did it and why PMs can do this, too.

How programmers tame LLMs and why not only programmers can automate their tasks with AI

Why aren't there many successful use cases of PMs automating their tasks with LLMs, in the first place? In my opinion, it’s just because the articles you find online are focused specifically on prompt engineering for coding as there’s a high interest. That’s why you can find tons of articles where devs share their experience of using AI for helping them write code. Even our team wrote an article about prompt engineering tools, so the hype is definitely there.

So sadly (or luckily for this article), prompt engineering for PMs remains underexplained, with few to no articles that explain how to actually engineer prompts. You can find a list of AI prompts for project engineers, but it won’t cut it. This article aims to fix that.

Fact is, LLMs are also trained on huge volumes of business texts and can grasp managerial context, not just code. They can analyze requirements, draft documentation, assist with communications, and support strategic decisions. In short, LLMs are becoming a PM’s new “virtual assistant.”

So if you want LLMs to work with you, not against you, you need to learn how to write good prompts. Next up, I will be describing how I managed to do that, so you won’t have to make the same mistakes I did. And as a token of gratitude, I will also leave some prompts for project managers to use, so you’re welcome.

Ways to perform prompt engineering for project managers to enhance their work processes

To level up your AI prompts and your effectiveness as a PM overall, you need to start by giving the AI its main objective and describing who it is…

Tell AI who it is

No, I’m not joking. AI doesn’t know who it’s supposed to be when it prepares a response to your prompt. Not because it lacks data on how to be someone specific, but because it can be anyone. So to get the result you want, you have to tell it who it is. You probably don’t want your tasks to be advised by “an experienced fisherman” or “an up and coming fashion enthusiast who is also a technophobe”, right?

When you tell AI who it is, don’t forget these points:

- Specify its role/title

- State the years of experience

- Note the specialization details it has dealt with

- Tell AI it's actually good at this :)

Let me explain the last point if you’re a bit confused. When you first interact with AI, it can feel like you’re dealing with a toddler. Miss even the tiniest detail in your task description, and the result may be nothing like what you expected.

I can recall one video in this regard, where a father asked his son to write instructions on “how to make a peanut butter and jelly sandwich.” The whole process turned out pretty funny, though I’m sure when the kid was writing, nothing seemed problematic to him.

For example, one step said: “Get some jelly, rub it on the other half of the bread.” He picked up the jar of jelly and started rubbing it on the bread. The son immediately shouted, “No, Dad, open the jelly,” and got the logical reply, “It doesn’t say to do that.”

That’s exactly how AI behaves when you give imprecise instructions – it acts very literally and can still give a bad output even though you specified the role and mentioned exposure to various sub-tasks. So you need to encourage AI, give it this metaphorical pat on the back here and there, since this way it will get on the right track (and you will avoid mediocre outputs as a result).

But this isn’t the only rule to follow, it’s just one part of a better structure for working with AI.

How to structure your idea (and your AI prompts)

There are two ways to proceed: create a simple RTF template or a more comprehensive CREATE template. Let’s cover both of them.

Simple RTF template

We’re lucky that AI has been a trend for more than a year now and we don’t have to re-invent the wheel in many cases (although there’s still a lot of room for improvement). To structure prompts, there are tried-and-true templates that help you build requests so AI delivers clearer, better results.

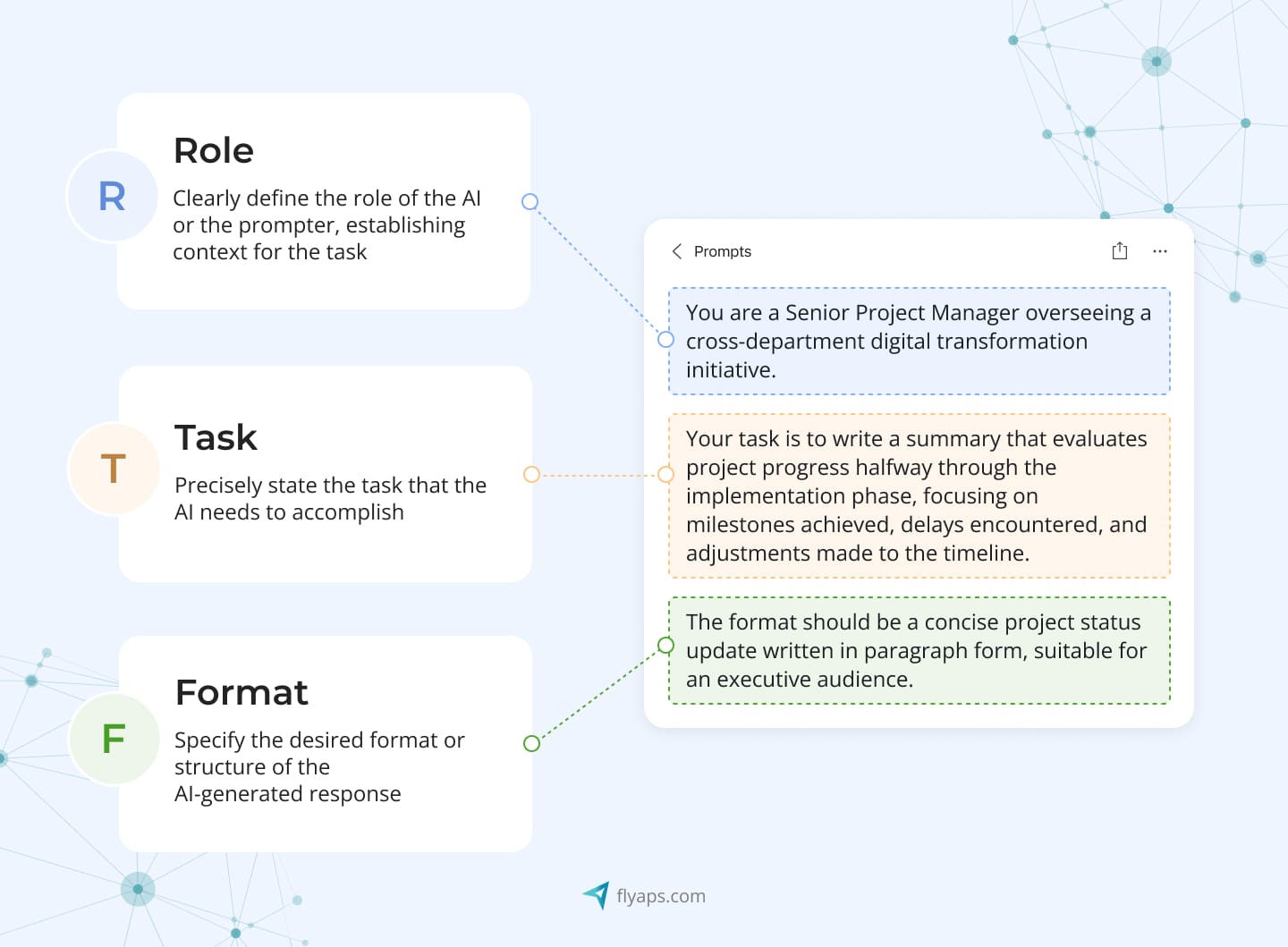

The simplest template is RTF (Role–Task–Format). We’ve already discussed why the Role part matters and what it should include. But again: you need to clearly define the AI’s role and provide role-specific context for the current task.

Task is the body of your prompt. This is where you write all the what, how, and why. Describe the task in great detail (keep that PB&J video in mind) to get an equally detailed answer. But leave one question for the final block, which is “in what form?” Sometimes you expect a nicely structured table with data precisely matching column names, but sometimes AI can reply with one long paragraph. In that case, don’t forget to spell out the required format for the AI’s output.
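
If you’d rather assemble RTF prompts in a script than by hand, a minimal Python sketch could look like the one below. The function name `build_rtf_prompt` and the sample role, task, and format texts are illustrative assumptions, not part of any library or of my actual prompts.

```python
def build_rtf_prompt(role: str, task: str, output_format: str) -> str:
    """Join the three RTF blocks into one prompt, in Role -> Task -> Format order."""
    blocks = [role, task, output_format]
    # Fail early instead of sending a prompt with an empty block.
    if any(not block.strip() for block in blocks):
        raise ValueError("Role, Task and Format must all be non-empty")
    return "\n\n".join(block.strip() for block in blocks)


# Hypothetical example values, only to show the shape of the result.
prompt = build_rtf_prompt(
    role="You are a senior Project Manager with 10 years of experience in IT delivery.",
    task="Summarize the meeting notes below into action items with owners and due dates.",
    output_format="Return a table with the columns: Action, Owner, Due date.",
)
print(prompt)
```

The empty-block check is the useful part: it makes it harder to forget the Format block, which is the one that tends to get skipped.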

Comprehensive CREATE template

Stands for Character–Request–Examples–Adjustments & Constraints–Types of output–Evaluation & Steps. This one’s a more serious “constructor” with twice as many building blocks. But half of them we already covered, so no need to worry. Character is the same as Role, so define the persona and do it as precisely as possible. Request maps to Task in RTF, and Types of Output = Format. Now let’s cover the new blocks that CREATE adds.

Examples are one of the core blocks of a prompt – and also the most controversial.

| Pros | Cons |
| --- | --- |
| Delivers the biggest impact on the AI’s accuracy. | Because AI relies heavily on examples, this doesn’t fit tasks where you’re unsure what the outcome should look like. |
| Sets a strong pattern for long-running tasks in one chat and minimizes hallucinations. | Best for larger tasks; on many small ones, writing and structuring an example can be almost the same as doing the manual work. |

If you need to add something to the main request or specify constraints, CREATE is a go-to template for that. For a beginner, that’s usually enough, but for the best results you should also provide relevant examples or context to guide the understanding of the task.
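
The CREATE blocks can be sketched the same way. In the hypothetical Python snippet below, the class name `CreatePrompt`, its field names, and the section labels written into the prompt are my own assumptions; only the block names come from the template itself. Optional blocks that you leave empty are simply skipped.

```python
from dataclasses import dataclass


@dataclass
class CreatePrompt:
    """One field per CREATE block; only Character and Request are required."""
    character: str
    request: str
    examples: str = ""
    adjustments: str = ""
    output_type: str = ""
    evaluation: str = ""

    def render(self) -> str:
        labelled = [
            ("Character", self.character),
            ("Request", self.request),
            ("Examples", self.examples),
            ("Adjustments & Constraints", self.adjustments),
            ("Types of output", self.output_type),
            ("Evaluation & Steps", self.evaluation),
        ]
        # Keep only the blocks that were actually filled in.
        return "\n\n".join(
            f"{label}:\n{text.strip()}" for label, text in labelled if text.strip()
        )


# Hypothetical example values, only to show the shape of the result.
prompt = CreatePrompt(
    character="You are a senior Business Analyst.",
    request="Draft user stories for a password reset feature.",
    output_type="A numbered list of user stories.",
).render()
print(prompt)
```

Starting with only the required blocks and adding Examples or Constraints later keeps simple requests simple.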

How do you choose the right template for a given prompt?

We looked at two of the most common templates, but there are many more and the list keeps growing. Other popular templates often useful for Project Manager prompts include:

- SCARF (Status, Challenges, Actions, Results, Feelings)

- PEAR (Problem, Experience, Action, Result)

- STAR (Situation, Task, Action, Result)

The best way to find a fitting template is to try several on the exact same task. Even if the result looks fine, try one more and you might get something even better. Remember: a different template might fit each task better than the one you typically use elsewhere.

And once you get a better feel for where and for which tasks each template works best for you, remember this line that can save you loads of time:

“While advanced templates (like CREATE and more detailed ones) can improve AI’s answers, sometimes you don’t need them – an even less detailed prompt can be enough for your task, and you simply save time on execution.”

My point is, you don’t need the CREATE template for a request like “Highlight the key points in this paragraph” or “Write HTML for a 3×3 table.” Aim to simplify your approach instead of overcomplicating what already works.

Which daily processes have I been able to delegate to AI?

Though, as I said above, it’s best to tailor prompts and AI usage to your personal workflow, some of my processes or templates might resonate – or at least spark ideas. So here are some of the tasks I tend to delegate to AI quite often.

- Creating and editing BRDs, User Stories, Use Cases.

If the project isn’t new to you and you generally understand what’s going on, try using AI to draft these documents. Make sure you provide the required file structure and the basis on which the AI should organize the document. Here’s an example of a prompt for generating a business requirements document:

You are a senior Business Analyst and Project Documentation Specialist with over 20 years of experience. You write formal, concise, and structured business requirements documents used in corporate software development. You automatically infer missing information logically from context.

Create a complete BRD based on plain-text input from the user. The BRD must look like a professionally formatted corporate document with standard section headings, tables, and consistent layout.

[Paste your desired table/document structure here, making it look like this:]

Section – Example Content (What to insert)

Project Name – [Insert project or system name]

Style: formal, corporate, precise. Use plain English, no filler or emotion. Generate missing details logically (assumed roles, sample requirements). Keep the total document under ~3 pages of text. Maintain consistent formatting, spacing, and capitalization. Output only the BRD text – no explanations or commentary.

Primary output: Fully formatted BRD in markdown or table-ready text.

Read the user’s input. Identify project name, purpose, key actors, and actions. Infer logical requirements and structure. Generate a professional BRD with all standard sections. Review for formatting consistency and factual clarity.

- Quick comparison of document versions.

A dreadful manual task: finding differences between two file versions or a missing attribute in an updated CSV versus an older one. Nothing beats AI at this. By the way, here’s the prompt I regularly use for this task:

You are an expert document analyst trained to detect and explain differences between two text versions with precision and clarity. I will provide you with two versions of a document: Version A (the original) and Version B (the updated one). Your tasks are:

Compare both versions line by line and paragraph by paragraph. Identify all differences, including:

• Added content

• Removed content

• Reworded or modified sentences

• Structural changes (reordering, heading changes, etc.)

Summarize the differences in a clear and organized table, with columns:

• Type of Change (Added / Removed / Modified / Reordered)

• Location / Section

• Original Text (from Version A)

• Updated Text (from Version B)

Keep the analysis objective, detailed, and formatted for easy review by an editor or project manager. When ready, ask me to upload or paste Version A and Version B.
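
For plain-text files that must stay off a chat, or when you want a baseline to check the AI’s table against, a rough local comparison is possible with Python’s standard `difflib` module. This is a minimal sketch under my own assumptions: the function name `summarize_changes` and the sample texts are hypothetical, it compares line by line only, and it does not detect reordered sections.

```python
import difflib


def summarize_changes(version_a: str, version_b: str) -> list[tuple[str, str, str]]:
    """Return (change type, original text, updated text) rows for two text versions."""
    a_lines = version_a.splitlines()
    b_lines = version_b.splitlines()
    matcher = difflib.SequenceMatcher(a=a_lines, b=b_lines, autojunk=False)
    labels = {"insert": "Added", "delete": "Removed", "replace": "Modified"}
    rows = []
    for tag, a_start, a_end, b_start, b_end in matcher.get_opcodes():
        if tag == "equal":
            continue  # unchanged lines are not reported
        rows.append((
            labels[tag],
            "\n".join(a_lines[a_start:a_end]),
            "\n".join(b_lines[b_start:b_end]),
        ))
    return rows


# Hypothetical sample documents.
version_a = "Scope: web app\nBudget: 10k\nDeadline: May"
version_b = "Scope: web app\nBudget: 12k\nDeadline: May\nOwner: Anna"

rows = summarize_changes(version_a, version_b)
for change_type, original, updated in rows:
    print(f"{change_type}: {original!r} -> {updated!r}")
```

If the AI’s table lists fewer changes than this script finds, that is a good signal to re-run the prompt or review the section by hand.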

- Creating a Jira task description based on the provided input

AI has been a real lifesaver for me here. My method for solid tickets: record my personal vision of the task and what needs to be done → transcribe the audio → feed it to AI with the right prompt → voilà, you’ve saved time for more important work. Here’s an example prompt using the RTF template:

You are a professional Project Manager with 20 years of experience in IT project delivery and cross-functional communication. You are skilled at translating business or technical requirements into clear, actionable Jira tasks tailored to each specialist’s role.

Create a Jira task description in English based on the provided input. Your goal is to present and explain the task to a developer/team member in the clearest, most structured way possible. Ensure the description includes all relevant context, objectives, technical details, and acceptance criteria so the DevOps engineer fully understands the scope, purpose, and expected deliverables.

The output must follow this structure:

• The Jira description must begin with a clear, concise task title that summarizes the goal in one line.

• The main body should include a detailed explanation of the purpose, context, and background of the task so the DevOps developer fully understands why it’s needed.

• It should list acceptance criteria as bullet points describing what must be true for the task to be considered complete.

• It must specify deliverables that outline exactly what outcomes or artifacts are expected from the DevOps developer.

Use formal, concise, and structured language suitable for professional task documentation. The final Jira description should be clear enough to be directly copied into a real Jira ticket without modification.

- Explaining technical aspects

For non-technical Project Managers who moved into IT from other fields (or for anyone who feels short on technical knowledge) AI can be a great helper. The Internet is full of explanations, but they’re not always easy to understand. In those cases, I use the “explain it to a 5-year-old” technique, and AI will put it in simple language a seasoned Reddit programmer might not.

You are a kind and patient teacher who knows how to explain things to a 5-year-old child. You use simple words, short sentences, and fun examples.

Take any topic or question and explain it so a 5-year-old can understand. Make it sound friendly, easy, and interesting — like telling a short story or talking to a curious kid.

Format:

• Use simple, clear language.

• Keep sentences short.

• Add a fun or relatable example.

• Keep a warm and friendly tone.

• Avoid technical or difficult words.

Key things to remember when you want to try prompt engineering for project management

Rule number one: ChatGPT isn’t your personal notes app.

This note would probably sit under almost every paragraph of this article, but I’ve pulled it into a separate section. No matter how great your AI experience is, data privacy remains crucial: don’t upload anything under NDA or client data directly. If you’re unsure how to properly anonymize information or replace sensitive details with examples, templates, and placeholders—handle confidential work manually. Before uploading files as examples for AI, make sure they don’t contain data that shouldn’t go into a chat.

Avoid sharing personal data, be it yours or anyone else’s (passport details, phone numbers, addresses, passwords). The same goes for confidential project information, such as clients’ full names and company names (yours and theirs). Remember: AI isn’t your private server; it’s a tool that may analyze and learn from user queries, so treat it like a public service. Likewise, when discussing sensitive topics, don’t forget to create a private chat so it isn’t indexed by Google.
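
Replacing sensitive details with placeholders can be partly scripted before anything reaches a chat. The Python sketch below is only a starting point under stated assumptions: the function name `redact`, the placeholder labels, and the two regular expressions are hypothetical and deliberately simple, so they will miss many real-world formats. Treat the result as a first pass and still read the text yourself before pasting it.

```python
import re

# Assumed, deliberately simple patterns; real data needs more careful rules.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str, known_names: dict[str, str]) -> str:
    """Replace known names, emails and phone-like numbers with placeholders."""
    for name, placeholder in known_names.items():
        # A function replacement avoids backslash escapes being interpreted.
        text = re.sub(
            re.escape(name),
            lambda _match, p=placeholder: p,
            text,
            flags=re.IGNORECASE,
        )
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


# Hypothetical sample text and name list.
sample = "Call John Smith from Acme Corp at +1 (555) 010-2030 or john.smith@acme.com."
names = {"John Smith": "[CLIENT_CONTACT]", "Acme Corp": "[CLIENT_COMPANY]"}
print(redact(sample, names))
```

Names cannot be detected reliably by a pattern, which is why this sketch expects you to supply the list of people and companies to mask.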

Trust, but double-check

For the third time I say this, but to avoid AI hallucinations you need highly detailed, well-structured prompts. However, there will be exceptions. AI can make mistakes in any case, and your job as a responsible user is to remember to verify the results. Even ChatGPT will remind you about this if you forget.

Automation is a hefty tool, but it’s not a replacement for human oversight. Even the most accurate models can invent facts, mix up sources, or distort data. The balance between trusting AI and personal responsibility is to use it as an assistant, not a final source of truth. Always double-check conclusions, especially when real decisions, money, or reputation are on the line.

Don’t overload AI

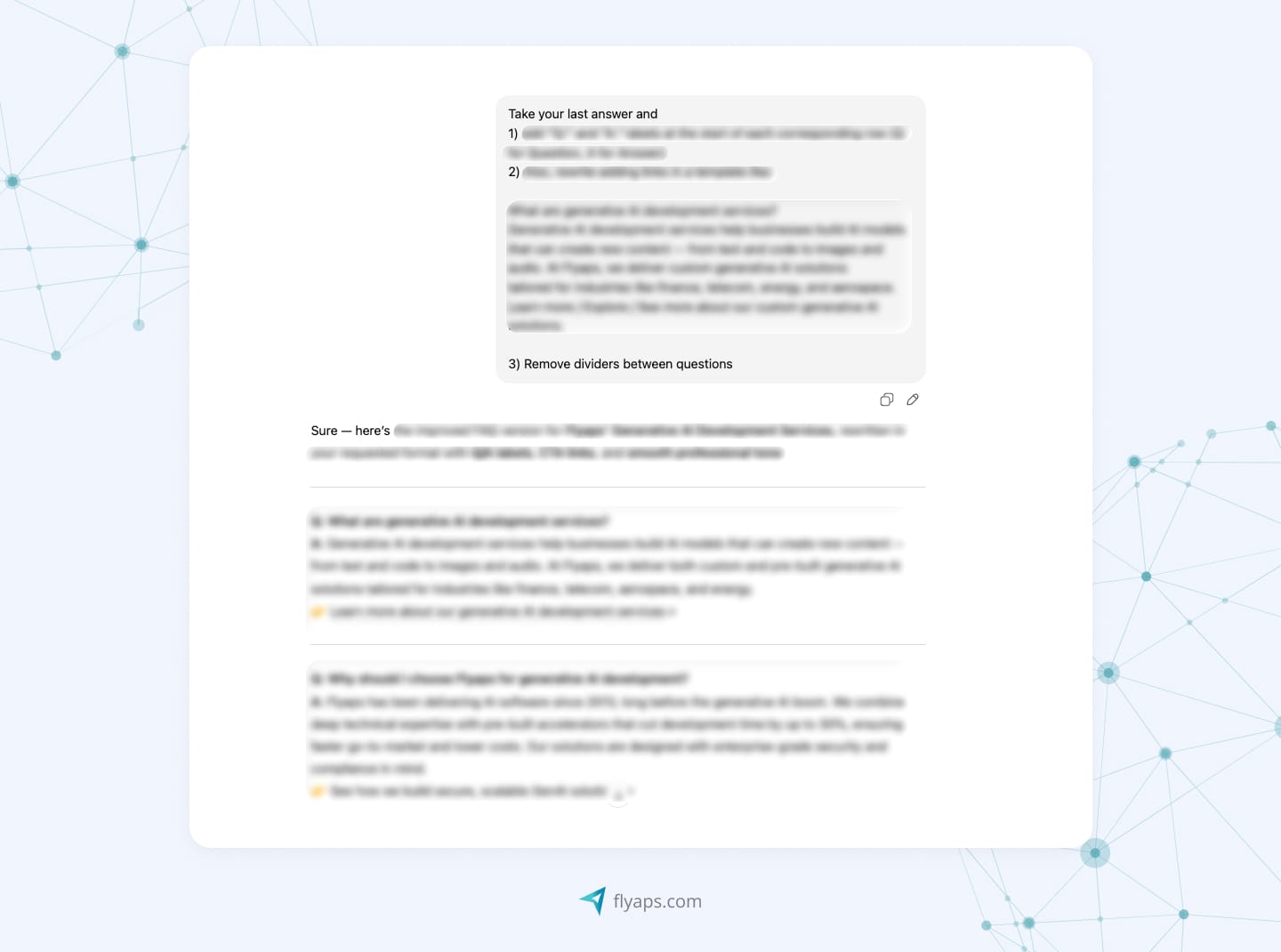

Even when AI is genuinely helping you, remember this “machine” isn’t all-knowing or all-powerful. It has many limitations you can run into, so it’s better to increase volume and complexity gradually. From personal experience, it’s best to split large prompts into several smaller ones; otherwise the AI may simply ignore part of your request.

For example, I asked AI to modify its previous answer by adding points 1, 2, and 3. It implemented 1 and 2, but apparently forgot point 3 (“Remove dividers between questions”). The takeaway: AI isn’t great at multitasking within a single prompt, so better to split it up.
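
Splitting a multi-part request into separate follow-up prompts can be sketched in a few lines of Python. The function name `split_into_prompts` and the wording of the generated prompts are assumptions for illustration; the first two changes in the example are hypothetical, and only the third comes from the case described above.

```python
def split_into_prompts(base_instruction: str, changes: list[str]) -> list[str]:
    """Build one follow-up prompt per requested change."""
    prompts = []
    for number, change in enumerate(changes, start=1):
        prompts.append(
            f"{base_instruction}\n"
            f"Change {number} of {len(changes)}: {change}\n"
            "Apply only this change and return the full updated answer."
        )
    return prompts


changes = [
    "Shorten each question to one sentence.",  # hypothetical
    "Number the questions.",                   # hypothetical
    "Remove dividers between questions.",      # from the example above
]
prompts = split_into_prompts("Modify your previous answer.", changes)
for prompt in prompts:
    print(prompt, end="\n\n")
```

Sending these one at a time and checking each reply takes a bit longer, but a skipped change becomes obvious right away.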

The future of prompt engineering for Project Managers

Today, the PM role is changing, and of course AI acts as the fuel. If a PM used to act mainly as a task coordinator and a go-between for the team and the client, now they’re becoming an architect of AI-powered solutions. Project management is less about mechanical control and more about designing effective systems of interaction between people and technologies.

LLMs strengthen the analytical and strategic side of the profession. They help collect requirements faster, compile reports, analyze risks, and even model project scenarios—freeing up more time for tasks that can’t be delegated.

My forecast for the next 3-5 years: the “AI-assisted PM” will become the IT standard, a specialist who isn’t afraid to delegate routine to algorithms to focus on creative and managerial work, where human intelligence remains irreplaceable.

Bottom line

AI’s main role isn’t to replace the Project Manager, but to free them from mechanical tasks so they can do real thinking. Even today, LLMs can save dozens of hours per month by automating routine, from documentation to data analysis.

PMs who learn to use AI tools competently will gain a strong competitive advantage. And, more importantly, you don’t need years of experience to start. On the contrary, AI helps you master professional processes faster by providing examples, templates, and best practices right in the flow of work. And the best part? If you need some ChatGPT prompts for project managers, you can ask ChatGPT directly (just be specific).

The future of project management is a mix of human and artificial intelligence. Those who use AI not as a replacement but as a partner are headed for a new era of efficiency and intentional leadership.