GDPR and AI: How to Develop Compliant AI Products for Modern Businesses

No modern technology is as data-dependent as AI. It is evolving so quickly that lawmakers keep drafting new data protection rules and updating existing regulations to protect user data from emerging threats. As a result, the legal landscape for AI keeps shifting, and the regulations themselves are not set in stone.

As an AI-focused software development company, we at Flyaps see how challenging the legal aspects can be for businesses that use AI-powered solutions or want to develop them. They must navigate ever-changing regulations while ensuring their AI systems remain compliant and safe. In this article, we will discuss the requirements and regulations imposed on data collection, processing, and storage, as well as practical strategies for developing compliant AI solutions. Let’s start with the key laws you should take into account.

The key AI regulations

There are three main laws companies must consider when developing AI solutions: the General Data Protection Regulation (GDPR), the AI Act, and the California Consumer Privacy Act (CCPA). These laws are enforced in different regions, but what matters is where your product is used, not where it was built: it must comply with the laws of every region it serves. We strongly advise against ignoring any of the laws listed below. With that said, let’s see what they look like.

General Data Protection Regulation (GDPR)

The GDPR is an EU regulation that took effect on May 25, 2018. This legal framework establishes clear guidelines on how organizations should collect, process, and store users’ personal data. The law applies to you no matter where you are located if you handle the personal data of people in the EU.

The core idea of the GDPR is that organizations need a lawful basis, such as clear consent, to use people's data, and must tell them exactly how the data will be used. People have a right to request the deletion of their data. Those who use and store data must put robust security measures in place to protect it from breaches and unauthorized access.

AI Act

Another set of rules was introduced by the EU, but this time they were designed to regulate AI specifically. The law splits AI systems into four categories based on the potential risk they pose:

- Unacceptable risk. Such systems are prohibited because they can cause significant harm. Examples include AI systems used for social scoring (rating people based on their behavior) and manipulative techniques that can distort human behavior.

- High-risk AI systems. These systems are heavily regulated. They must meet strict requirements because they can significantly impact people’s lives, like AI in medical devices, autonomous vehicles, or systems used in hiring processes.

- Limited risk. These systems have lighter obligations. For instance, AI chatbots and deepfakes need to be transparent about being AI-driven so users know they are not interacting with a human.

- Minimal risk. Most AI applications, like spam filters and AI in video games, fall under this category and are not regulated under the AI Act because they pose little to no risk.
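One way to make the four tiers actionable is to keep an inventory of your AI systems with a tier assigned to each. The sketch below is only an illustration: the system names and the tiers given to them are hypothetical, and a real classification requires legal review against the text of the AI Act.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Plain-language summary of each tier, paraphrased from the list above.
TIER_SUMMARY = {
    RiskTier.UNACCEPTABLE: "prohibited",
    RiskTier.HIGH: "heavily regulated, strict requirements",
    RiskTier.LIMITED: "lighter obligations, mainly transparency",
    RiskTier.MINIMAL: "not regulated under the AI Act",
}

# Hypothetical internal inventory. The tier assigned to each system is an
# illustrative guess based on the examples above, not a legal classification.
AI_INVENTORY = {
    "resume-screening-model": RiskTier.HIGH,
    "support-chatbot": RiskTier.LIMITED,
    "spam-filter": RiskTier.MINIMAL,
}


def systems_needing_review(inventory):
    """Return names of systems that are prohibited or heavily regulated."""
    flagged = {RiskTier.UNACCEPTABLE, RiskTier.HIGH}
    return sorted(name for name, tier in inventory.items() if tier in flagged)


for name, tier in AI_INVENTORY.items():
    print(f"{name}: {tier.value} risk ({TIER_SUMMARY[tier]})")
print("Needs compliance review:", systems_needing_review(AI_INVENTORY))
```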

Most of the AI Act's rules apply to high-risk AI systems. Providers of these products must:

- Implement a comprehensive risk management system that operates throughout the entire lifecycle of the AI system, from the design phase to post-deployment.

- Use high-quality, representative, and error-free data for training, validation, and testing of the AI system.

- Create thorough technical documentation detailing how the AI system works and its intended use.

- Design the AI system in such a way that it automatically records events and changes that are relevant for identifying and managing risks. Additionally, providers must ensure the system allows for human oversight, meaning that a human can monitor and intervene in the system's operations as needed.

- Ensure the AI system is designed and tested to perform its tasks correctly under a variety of conditions and be protected against security threats.

- Establish a quality management system that oversees compliance with the AI Act.
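Two of the requirements above, automatic event recording and human oversight, translate fairly directly into code. Here is a minimal sketch, assuming a model that outputs a score between 0 and 1. The `AuditLog` class, the `decide_with_oversight` function, and the threshold values are all hypothetical names and numbers chosen for illustration, and a production system would persist the log rather than keep it in memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditLog:
    """Append-only, in-memory event log (a real system would persist this)."""
    events: list = field(default_factory=list)

    def record(self, event_type, **details):
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event": event_type,
            **details,
        }
        self.events.append(entry)
        return entry


def decide_with_oversight(score, log, threshold=0.5, review_band=0.1, reviewer=None):
    """Auto-decide clear cases; route borderline ones to a human reviewer.

    `reviewer` is a callable that takes the score and returns True or False.
    Without a reviewer, every case is decided automatically.
    """
    log.record("model_score", score=score)
    if reviewer is not None and abs(score - threshold) <= review_band:
        decision = reviewer(score)
        log.record("human_review", decision=decision)
    else:
        decision = score >= threshold
        log.record("automatic_decision", decision=decision)
    return decision


log = AuditLog()
decide_with_oversight(0.92, log)                            # clear case
decide_with_oversight(0.55, log, reviewer=lambda s: False)  # borderline case
for entry in log.events:
    print(entry["event"], entry)
```

Every score and every decision, automatic or human, ends up in the same log, which is what makes later risk analysis possible.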

The AI Act also covers general-purpose AI (GPAI): AI systems like GPT-4 that can handle a variety of tasks instead of being focused on a single one. Since these systems are versatile and can be integrated into many different applications, they need special regulations. Providers of these systems must keep detailed records about how the AI works, respect copyright laws by ensuring the data they use is legally obtained, and provide clear summaries of the data used for AI training.

Even open-source GPAI models need to follow some rules, like sharing information about their training data. If a GPAI system uses a lot of computing power and could pose bigger risks, it has to go through extra checks to ensure it doesn't harm users or society.

California Consumer Privacy Act (CCPA)

The CCPA is California's state-level privacy law, often called the “California GDPR.” It regulates how businesses handle the personal information of California residents.

As with GDPR, Californians have the right to know what personal data is collected about them and how it's used, and to opt out of having it shared. However, there are some differences. GDPR follows an “opt-in” model: where processing relies on consent, that consent must be obtained before the data is collected. In contrast, CCPA allows data collection by default but gives consumers the right to opt out.

Now, let’s look at why compliance really matters.

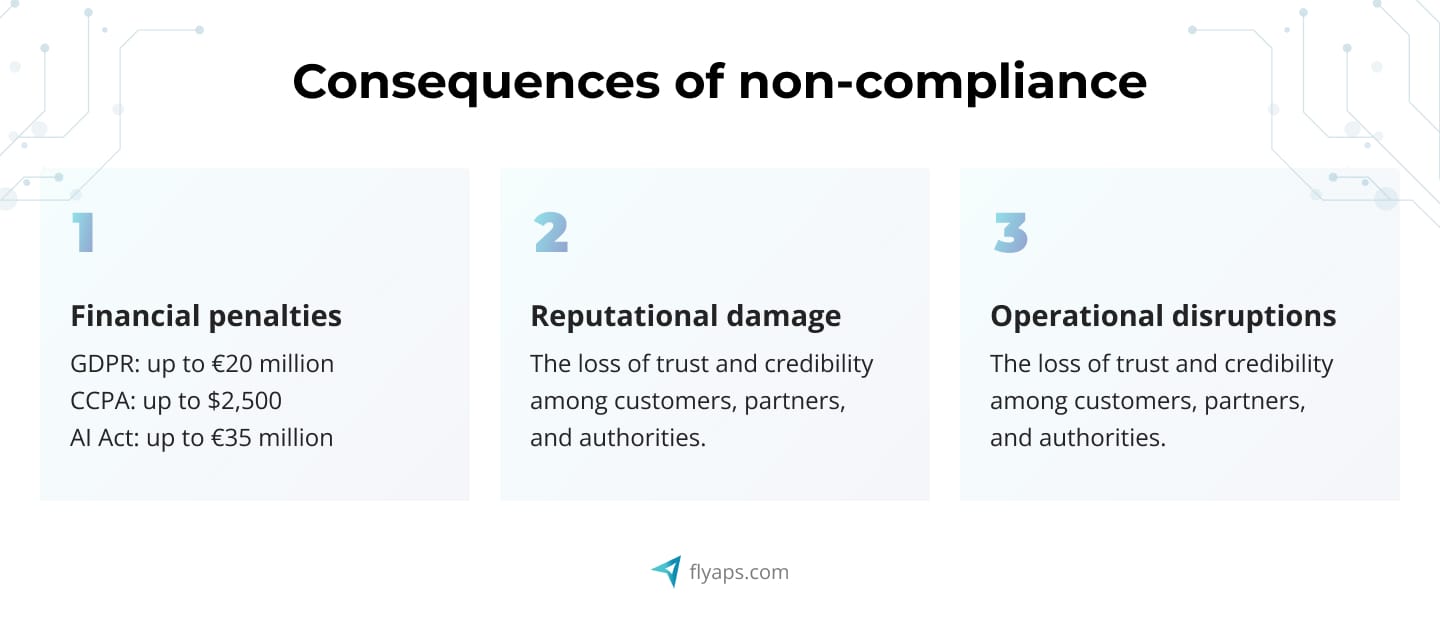

Consequences of non-compliance

Fines are just the tip of the iceberg when it comes to ignoring data privacy laws and AI regulations. But let's kick things off with them.

Financial penalties

Non-compliance with GDPR can lead to severe fines. Just look at Meta. Out of the top 20 GDPR fines ever recorded, seven hit Meta or its companies. Meta alone got slapped with a record-breaking fine of over €1.2 billion! In total, GDPR fines have now soared past €4 billion.

The CCPA isn't messing around with fines either, although they're not as sky-high as the GDPR's. If a company unintentionally slips up, it could be looking at a fine of up to $2,500 per violation. But if it deliberately violates the CCPA, that fine can shoot up to $7,500 per violation.

When it comes to the AI Act, if you don't play by the rules with certain AI practices, you could be hit with a whopping fine of up to €35 million or 7% of your company's annual turnover, whichever is higher. Even for other less severe violations, you’re still looking at fines up to €15 million or 3% of your annual turnover.
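The "whichever is higher" rule is easy to misread, so here is the arithmetic as a short sketch. The function name and the `severe` flag are our own illustrative choices, and the result is only the upper limit described above, not a prediction of an actual fine.

```python
def ai_act_fine_cap(annual_turnover_eur, severe):
    """Upper limit of the fine: the higher of a fixed amount and a turnover share.

    severe=True  -> EUR 35 million or 7% of annual turnover
    severe=False -> EUR 15 million or 3% of annual turnover
    """
    if severe:
        fixed_cap, percent = 35_000_000, 7
    else:
        fixed_cap, percent = 15_000_000, 3
    # Integer arithmetic keeps the euro amounts exact.
    turnover_share = annual_turnover_eur * percent // 100
    return max(fixed_cap, turnover_share)


# A company with EUR 1 billion turnover: 7% is EUR 70 million, above the fixed cap.
print(ai_act_fine_cap(1_000_000_000, severe=True))
# A company with EUR 100 million turnover: 7% is EUR 7 million, so EUR 35 million applies.
print(ai_act_fine_cap(100_000_000, severe=True))
```

For smaller companies the fixed amount dominates, while for large ones the percentage does.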

Reputational damage

Ignoring data privacy laws like GDPR can really hurt your company's reputation. Just look at OpenAI's ChatGPT. Recently, the privacy rights group noyb filed a complaint against it, accusing OpenAI of violating GDPR rules. One notable instance was when ChatGPT wrongly declared journalist Tony Polanco to be deceased, an error that persisted for over a year. This raises concerns about trust and reliability, making users and businesses wary of adopting the technology.

Operational disruptions

Addressing compliance failures often means implementing corrective measures and responding to investigations and audits. This can be a time-consuming process that can pull staff away from their regular duties and disrupt everyday operations. Organizations may also face more frequent audits and inspections from regulatory authorities, leading to ongoing pressure and additional compliance costs.

Now that we've discussed the risks, let’s talk about ways to avoid them.

Principles for developing GDPR-friendly AI products

Data protection regulations can seem daunting, so here we'll discuss the key principles that successful companies are implementing to ensure the AI products they build and use are compliant.

Fairness and non-discrimination

Imagine an AI system used in a hiring process that inadvertently favors certain demographics over others. To comply with regulations, companies must regularly audit their AI models, not just the data they use but also the algorithms themselves.

This is especially critical when building a complex AI agent that makes autonomous decisions, as the potential for unintended bias is significantly higher without rigorous oversight.

For example, when building CV Compiler, an ML-based resume parser, we used carefully selected data to train the ML algorithm on what an ideal resume should look like. This data was based on diverse sources, including the book “The Google Resume” by Gayle Laakmann McDowell and practical advice from specialists in HR and recruitment. This way, the tool's suggestions are based on objective criteria from well-respected sources and industry standards. By focusing on specific, measurable attributes of resumes, we minimized the risk of subjective or biased recommendations.
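A model audit can start with something as simple as comparing outcomes across groups. The sketch below computes the selection rate for each group and the ratio between the lowest and highest rate. The data is made up, and the 0.8 warning threshold is an assumption borrowed from the "four-fifths" rule of thumb used in US hiring practice; neither the GDPR nor the AI Act prescribes this particular metric.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> {group: selection rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group
    return min(rates.values()) / highest


# Made-up audit sample: 8 candidates per group.
SAMPLE = (
    [("A", True)] * 4 + [("A", False)] * 4
    + [("B", True)] * 2 + [("B", False)] * 6
)

rates = selection_rates(SAMPLE)
ratio = disparate_impact_ratio(rates)
print(rates, ratio)
if ratio < 0.8:  # assumed rule-of-thumb threshold, not a legal requirement
    print("Selection rates differ noticeably between groups; investigate.")
```

A low ratio does not prove discrimination on its own, but it tells you where to look more closely at both the data and the algorithm.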

Purpose limitation and data minimization

Let's say a healthcare AI collects patient data for diagnostic purposes. To adhere to data protection laws, organizations should limit the data they collect to only what's necessary for the AI's task. This is advisable not only to protect the organization from legal consequences but also to reduce digital risks such as sensitive information disclosure. Techniques like generative adversarial networks (GANs) can be used to create synthetic data, reducing the need for extensive personal information. Similarly, federated learning allows AI models to learn from data stored on local devices without transmitting sensitive information to a central server, thus minimizing privacy risks.

Interested in how to safeguard your LLM-driven system from digital threats? You can find all the details in our dedicated article “Unveiling the Top 10 LLM Security Risks: Real Examples and Effective Solutions.”
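In code, data minimization often comes down to an explicit allow-list applied before anything is stored or passed to a model. The following is a minimal sketch; the field names are hypothetical, and which fields a diagnostic model truly needs is a decision for your domain and legal experts.

```python
# Hypothetical allow-list: the fields a diagnostic model is assumed to need.
DIAGNOSTIC_FIELDS = frozenset({"age", "symptoms", "lab_results"})


def minimize(record, allowed_fields=DIAGNOSTIC_FIELDS):
    """Return a copy of the record containing only allow-listed fields."""
    return {key: value for key, value in record.items() if key in allowed_fields}


def dropped_fields(record, allowed_fields=DIAGNOSTIC_FIELDS):
    """List what was left out, useful for documenting the minimization step."""
    return sorted(set(record) - allowed_fields)


raw_record = {
    "name": "Jane Doe",
    "home_address": "1 Example Street",
    "age": 54,
    "symptoms": ["fatigue", "cough"],
    "lab_results": {"crp": 12.3},
}

print(minimize(raw_record))
print("Not collected:", dropped_fields(raw_record))
```

An allow-list is safer than a block-list here: a new field added upstream is excluded by default until someone decides it is needed.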

Transparency and explainability

At Flyaps, we believe that transparency and explainability are the cornerstones of ethical AI. Users should be able to understand how AI decisions affect them. For example, a recommendation system suggesting products based on personal data should clearly disclose how it reaches those conclusions. We advocate for technologies that explain AI decisions, for example by showing which data points were influential in a decision. Such explanations are crucial for transparency.
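For a simple linear scoring model, showing which data points were influential is straightforward: each feature's contribution is its weight multiplied by its value. The sketch below assumes such a model with made-up weights and feature names. More complex models need dedicated attribution techniques, which this example does not cover.

```python
# Hypothetical weights of a linear recommendation score (illustrative values).
WEIGHTS = {
    "viewed_similar_items": 2.0,
    "past_purchases": 1.5,
    "days_since_visit": -0.5,
}


def explain_score(features, weights=WEIGHTS):
    """Return the score and each feature's contribution, largest effect first."""
    contributions = {name: weights[name] * features.get(name, 0) for name in weights}
    score = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


user_features = {"viewed_similar_items": 3, "past_purchases": 2, "days_since_visit": 4}
score, ranked = explain_score(user_features)

print(f"score = {score}")
for name, contribution in ranked:
    direction = "raised" if contribution > 0 else "lowered"
    print(f"{name} {direction} the score by {abs(contribution)}")
```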

Privacy by design and default

Consider a scenario where an AI-powered service collects user data. By integrating privacy considerations from the outset of development, such as conducting privacy impact assessments early on, companies can ensure that data handling complies with regulations. Keeping detailed records of compliance efforts also demonstrates accountability in case of audits or inquiries.

Automated decision-making

Let’s say a loan approval AI automatically decides on creditworthiness. If this decision significantly impacts individuals, like denying a loan, users must be informed about the decision's rationale and have a way to contest it. This ensures fairness and accountability in automated processes governed by legal standards.
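In practice, this means an automated decision should carry its reasons with it and have a path to human review. Below is a minimal sketch of such a record. The `LoanDecision` class, its fields, and the status names are hypothetical and only illustrate the flow.

```python
from dataclasses import dataclass, field


@dataclass
class LoanDecision:
    applicant_id: str
    approved: bool
    reasons: list  # human-readable rationale shown to the applicant
    status: str = "final"
    history: list = field(default_factory=list)

    def contest(self, applicant_note):
        """The applicant disputes the outcome: flag it for human review."""
        self.status = "under_human_review"
        self.history.append(("contested", applicant_note))

    def resolve(self, reviewer, approved, note):
        """A human reviewer confirms or overturns the automated outcome."""
        self.approved = approved
        self.status = "reviewed"
        self.history.append(("reviewed_by", reviewer, note))


decision = LoanDecision(
    applicant_id="A-1",
    approved=False,
    reasons=["debt-to-income ratio above limit"],
)
print("Denied because:", decision.reasons)

decision.contest("My income changed last month")
decision.resolve("analyst-7", approved=True, note="Verified updated payslips")
print(decision.status, decision.approved, decision.history)
```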

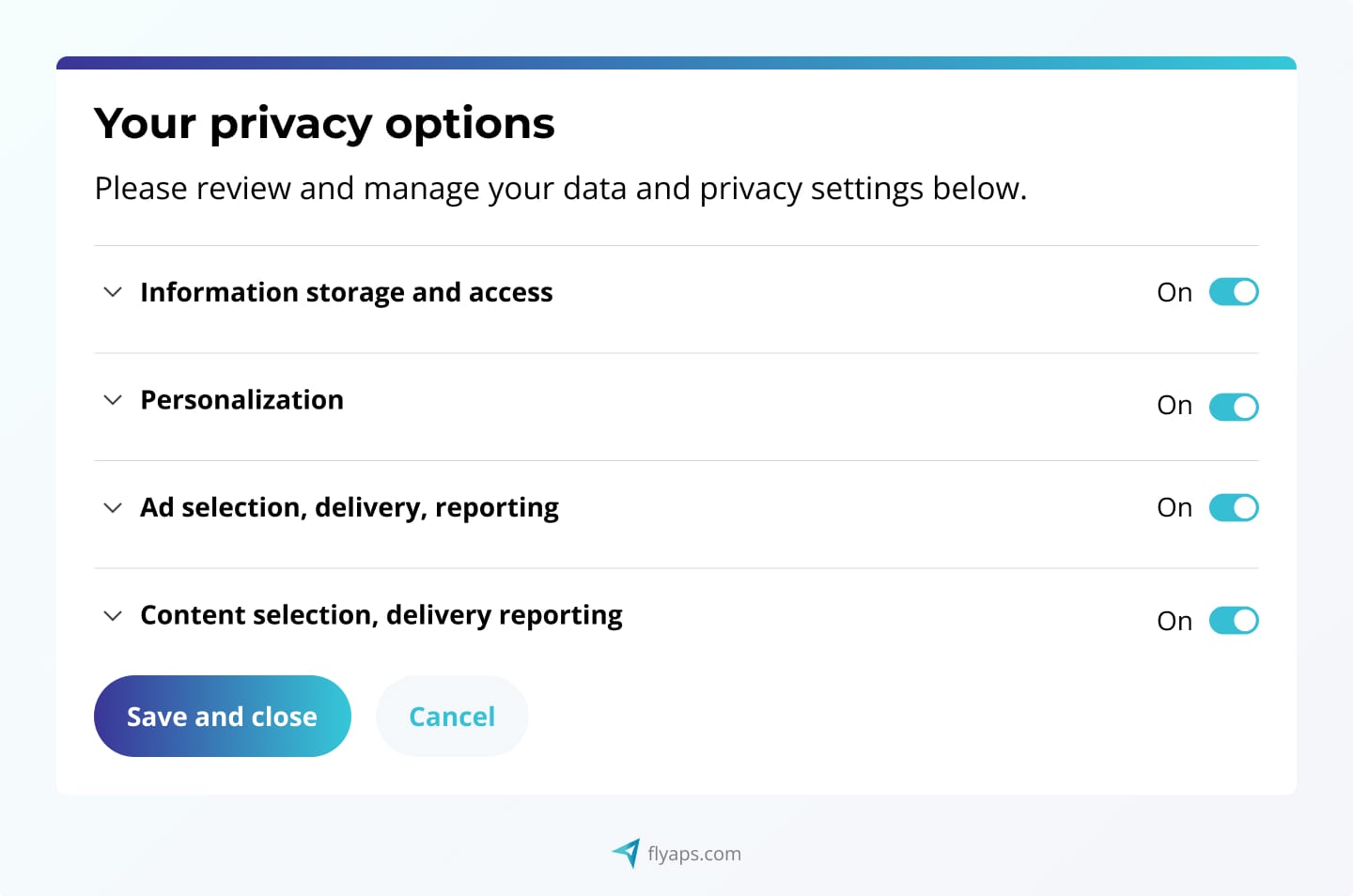

Consent management

For this principle, let’s imagine your company wants to implement consent management. You should provide a clear and user-friendly interface that explains data collection purposes, offer granular consent options, let users manage and withdraw consent easily, and maintain detailed consent records.
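The last three of those practices (granular options, easy withdrawal, detailed records) can be sketched as a small consent ledger. The class and purpose names below are hypothetical, and a real implementation would store the history durably.

```python
from datetime import datetime, timezone


class ConsentLedger:
    """Per-user, per-purpose consent with an append-only history."""

    def __init__(self):
        self._current = {}  # (user_id, purpose) -> bool
        self.history = []   # every grant and withdrawal, in order

    def _log(self, user_id, purpose, granted):
        self._current[(user_id, purpose)] = granted
        self.history.append({
            "user_id": user_id,
            "purpose": purpose,
            "granted": granted,
            "at": datetime.now(timezone.utc).isoformat(),
        })

    def grant(self, user_id, purpose):
        self._log(user_id, purpose, True)

    def withdraw(self, user_id, purpose):
        self._log(user_id, purpose, False)

    def has_consent(self, user_id, purpose):
        # No record means no consent: nothing is opted in by default.
        return self._current.get((user_id, purpose), False)


ledger = ConsentLedger()
ledger.grant("user-1", "analytics")
print(ledger.has_consent("user-1", "analytics"))       # granted for this purpose
print(ledger.has_consent("user-1", "model_training"))  # never granted
ledger.withdraw("user-1", "analytics")
print(ledger.has_consent("user-1", "analytics"))       # withdrawn
```

Consent is tracked per purpose, so agreeing to analytics never implies agreeing to model training, and the history keeps a record of both the grant and the withdrawal.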

Every limitation opens a door to innovation

These days, society is thrilled with the capabilities of ChatGPT. On the other hand, concerns about gen AI’s impact on issues like global warming or the ethics behind its decisions make people anxious, too. Such fluctuations affect trust, trust affects investment, and investment affects innovation. That's why it's great that we have laws aiming to create a fair and safe environment for innovation.

Moreover, the AI Act, for example, isn’t just about restrictions. It also offers opportunities for start-ups and small and medium-sized businesses to develop and train AI models before their release to the general public. It mandates that national authorities provide companies with a testing environment that simulates real-world conditions, fostering innovation while ensuring safety and fairness.

Broader adoption of AI technologies depends on a solid regulatory framework that reassures users and investors about the safety, fairness, and ethical standards of AI applications. By providing clear guidelines and support for innovation, regulations help build a sustainable and trustworthy AI ecosystem, ultimately accelerating technological advancements and societal benefits.

Why hire Flyaps for legally safe AI integration

Ensuring legal compliance is a lot easier when you have a trusted partner with experience in balancing innovation and legal safety. Here are a few reasons why Flyaps can be such a partner for you:

1. Seasoned experts in AI and compliance

With over 11 years in the AI game, we've seen how laws like GDPR have evolved, making us a perfect match for keeping your projects compliant with the latest legal standards.

2. Pre-built AI tools

We've got pre-built AI tools that are not only tailored to different industries but also designed to meet the highest standards of data protection and ethical practices.

3. Track record of success in regulated industries

We have a wealth of successful cases in highly regulated industries like telecom. Our experience ensures that our solutions comply not only with international laws but also with specific industry standards.

Looking for a team of AI-focused developers experienced in generative AI use cases to help implement AI into your processes in compliance with the latest regulations? Drop us a line!
